Hey all, it has been a pretty busy week with all things Lemmy.zip, and I'm excited to tell you all about it! If you haven't read it yet, part 1 is here.

Monday

Monday morning was quite quiet. At the end of part 1 last week, I mentioned that emails had gone down and we weren't able to send any out. As a result, we've now permanently moved our email provider to Brevo. The graphs aren't as pretty, but it seems to work fine as a provider.

Then I suddenly noticed that the user count for the instance had shot up by 20 people. I refreshed, and another 20 people had signed up. This didn't seem organic, so I jumped onto Brevo and saw that all the emails to the new registrations were bouncing, meaning the email addresses didn't exist. I turned registration off for the instance until I could add another layer of security in the form of manual applications. Once registration was re-enabled with applications, the bots were no longer able to join. Because email verification has always been required, those bots will never be able to post spam to the instance.

Here is a lovely graph of emails for you:

The red spike shows the bounce rate, which correlates with the spike in sent emails.
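
The giveaway in that graph is sent emails and bounces spiking together. As a rough illustration of spotting that pattern, here is a minimal Python sketch that flags hours where the bounce rate jumps past a threshold. The `HourStats` shape, the 50% threshold and the `min_sent` floor are all hypothetical choices for this example; this is not Brevo's API or how we actually monitor sign-ups.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HourStats:
    sent: int      # emails sent in this hour (hypothetical export format)
    bounced: int   # of those, how many bounced


def bounce_rate(stats: HourStats) -> float:
    """Fraction of sent emails that bounced (0.0 when nothing was sent)."""
    if stats.sent == 0:
        return 0.0
    return stats.bounced / stats.sent


def suspicious_hours(hourly: List[HourStats], threshold: float = 0.5,
                     min_sent: int = 10) -> List[int]:
    """Return indices of hours whose bounce rate exceeds `threshold`.

    Quiet hours with fewer than `min_sent` emails are ignored, so a single
    bounce on a slow morning does not trigger a false alarm.
    """
    return [
        i for i, h in enumerate(hourly)
        if h.sent >= min_sent and bounce_rate(h) > threshold
    ]


if __name__ == "__main__":
    # Made-up numbers: a quiet period, then a burst of bot sign-ups using
    # non-existent addresses that bounce.
    hourly = [HourStats(5, 0), HourStats(8, 1), HourStats(45, 40), HourStats(6, 0)]
    print(suspicious_hours(hourly))  # [2]
```

The `min_sent` floor is the main design choice: bounce rate on its own is noisy when only a handful of emails go out.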

It was around this time that I noticed the server storage was filling up quickly.

Tuesday

I investigated the storage issue and discovered that images from other servers were being cached here too, so we were on track to exceed our server storage pretty quickly.

After a lot of googling and reading up on migration processes, I finally chose an image storage provider (Backblaze B2 buckets). Thankfully, Pictrs, the software that handles the images, has a release-candidate update that allows images to be migrated to an external host.

The plan was to upgrade the database to prepare for the external migration, push the existing storage to the new host, then update the Docker container environment to point to the new storage location. All seemed well: I could see the new storage starting to be used. Then, suddenly, all the images stopped appearing.

Somehow (and I still don't know how), the database upgrade and the initial migration to the external host had failed. New images were being pushed to the external host, but the existing images had been cut off from the database and had never been transferred over.

Thankfully, the Pictrs developer is a pretty chill person who very kindly fixed the bug and pushed a new release-candidate container. I re-ran the migration, and the new version skipped files that had already been uploaded to the new storage host, so there were no conflicts and the database merged nicely.

This took quite a while, so images were missing for about 12 hours, I think. Sorry 😔

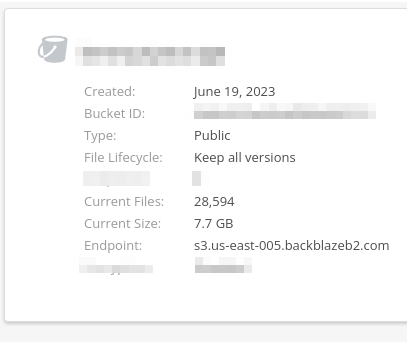

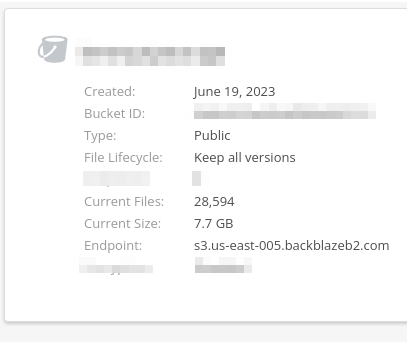

These are the file and storage stats from our image database as of today. Thankfully, the images are now fully separated from the main server, on storage that is a hell of a lot cheaper than our main cloud host.

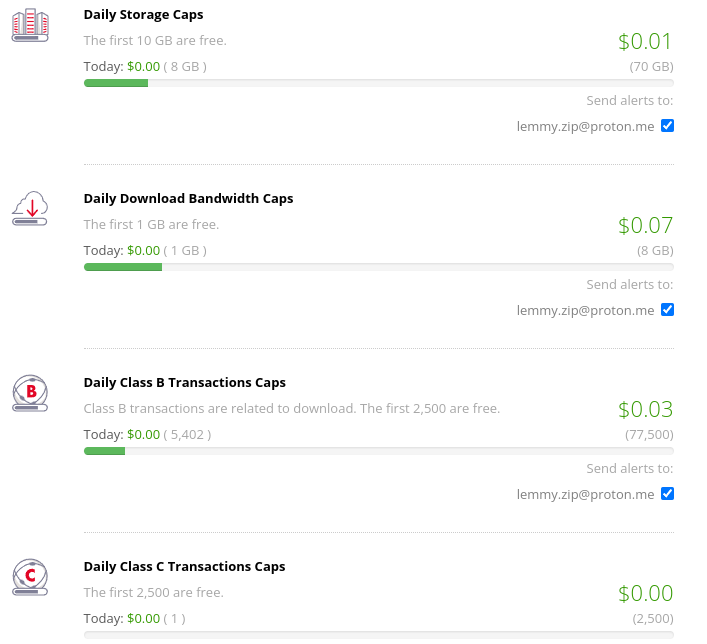

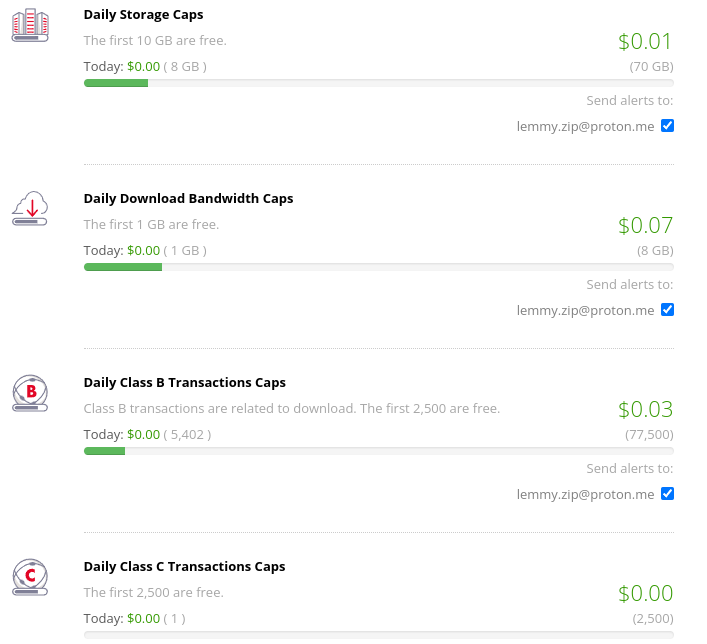

There is a small cost attached to this, though: in a week it has cost me $0.12, so not masses. Backblaze allows setting caps on usage. Unfortunately, I can't show you the full usage compared to the cap, because apparently you can't go back in time, but this is what the caps screen looks like:

As far as I can tell, I have set the caps quite high compared to what we actually use, but the last thing I want is storage capping out and everything breaking again!

On this day, I also appointed Sami as an admin for Lemmy.zip. They've been great: they've helped me keep on top of applications to the server and created better logos for the instance communities. They also did all the legwork for the slur filter, which I'll touch on later, and have been keeping on top of the bot situation to make sure we're safe.

If you haven't already, you can pop in here to say hi to Sami :)

Wednesday

First thing on Wednesday, I created the "Home" community. Many other instances have "Main" or similar, and the purpose is the same: a central discussion point for the server. It is open to everyone to post in, whether that's questions, issues with the server, or just saying hi. I'll also put posts like this one in there, and save Announcements for bigger announcements or emergencies.

Also on Wednesday, in an effort to speed up the site and to reduce bandwidth costs from the new image server, I set up Cloudflare for the instance. The DNS change seemed fine on my end and I don't think there were any issues, but as with all things DNS, things can go wrong until the change has propagated, so apologies if the site was down for you during this time.

What confused me massively was that, suddenly, all the images went down again. Cue a mild panic attack, as I couldn't work out why this was happening, until the very helpful Pictrs dev looked at my logs and pointed out that the server was returning 500 (internal server error) codes because its requests to the storage host were timing out. Thankfully (for me), it turned out Backblaze had gone down and had just been really slow to update their status page. Images came back a few hours later once they'd resolved the issue.

Thursday

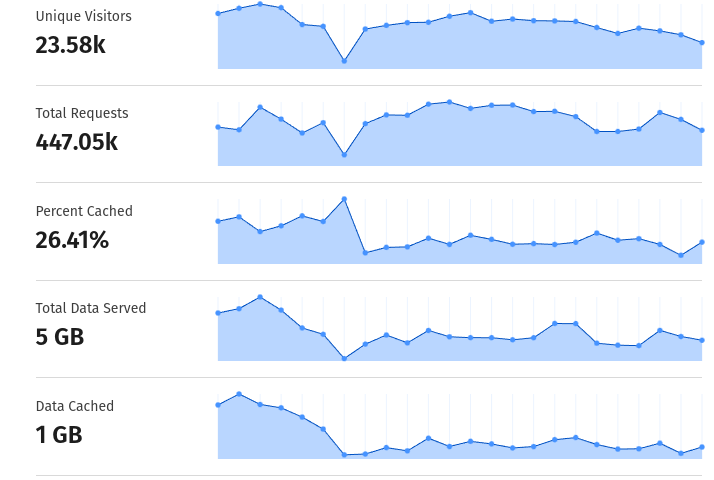

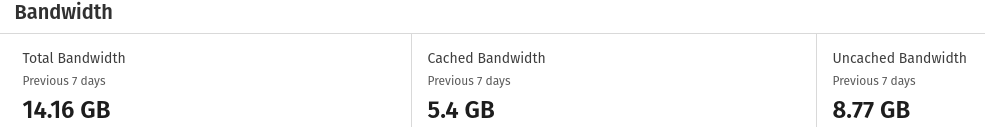

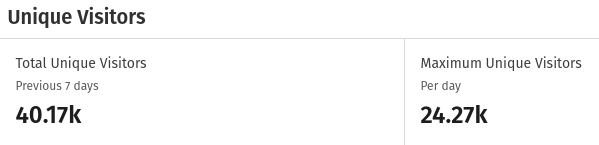

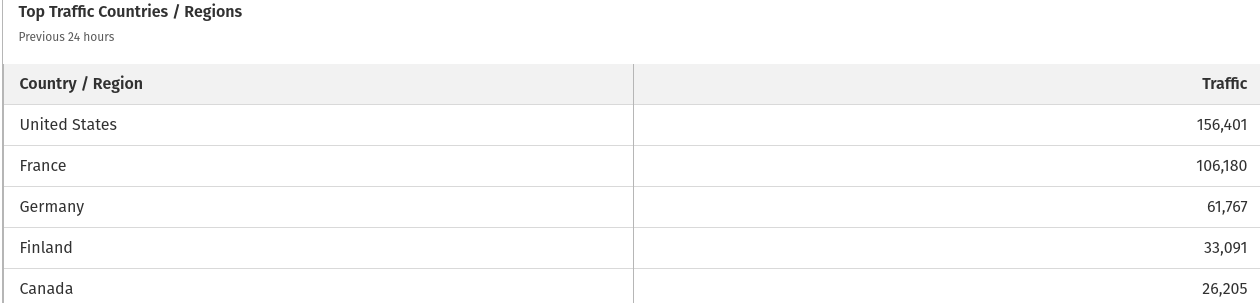

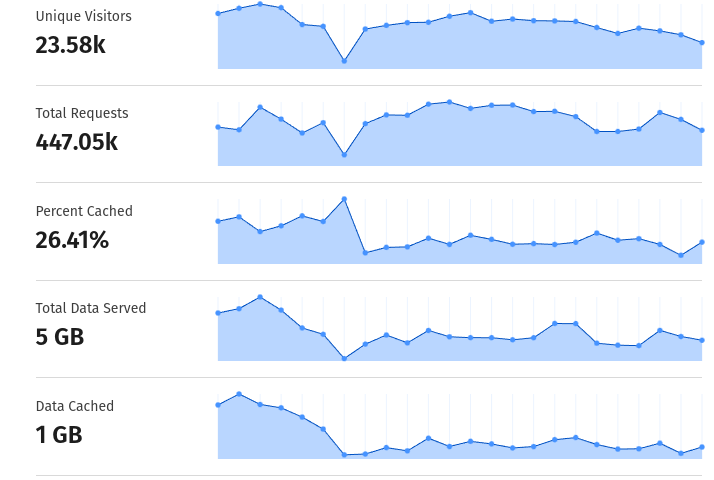

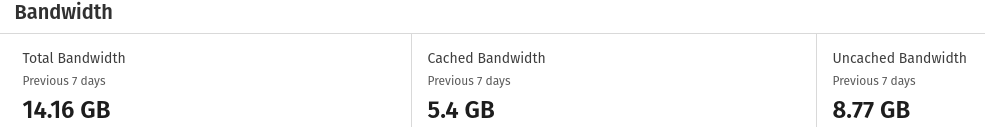

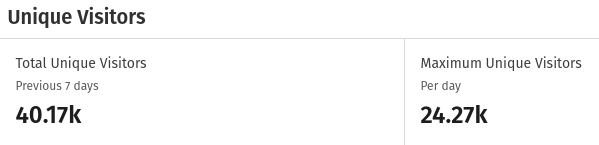

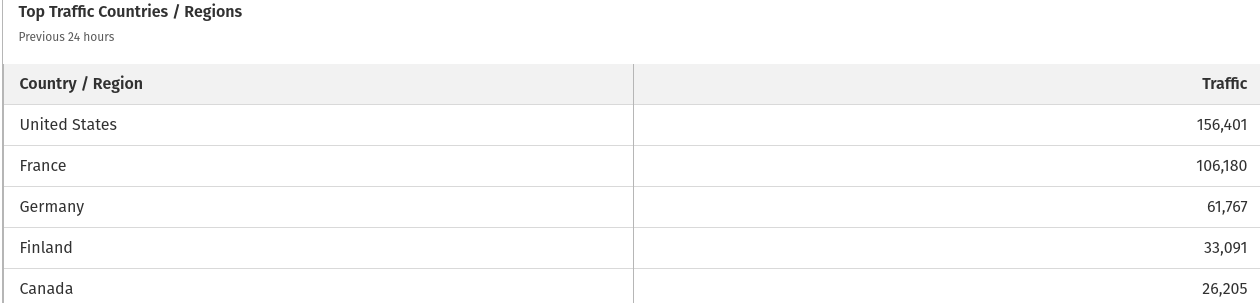

Since then, Cloudflare has been working pretty well. Here are some pretty graphs for you:

This is Cloudflare usage over the last 24 hours - the drop is related to the 0.18 upgrade.

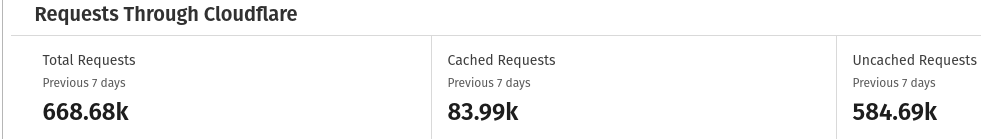

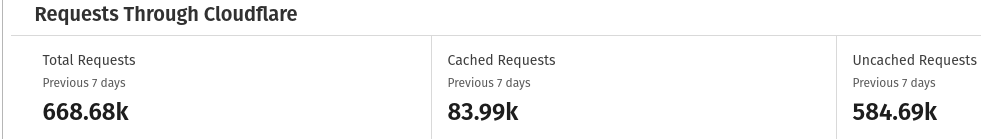

Requests through Cloudflare since enabling it

Bandwidth usage & savings via caching

Unique visitors to the site.

Traffic per region over the last 24 hours (US has pulled ahead again, France almost overtook them!)

If there are any other stats you're interested in seeing, let me know.

Friday

By Friday, Sami had done all the hard work to get the slur filter in place. We both feel that normal swear words (e.g. fuck) are fine on this instance, but racism and bigotry aren't something we'll tolerate. The filter only applies to posts on this instance (obviously we don't control what other instances do), and we'll monitor the usage of those words and enforce the rules where needed.

Also, Sami noticed that 0.18 had been officially released, so I started preparing to upgrade the server (we use the Ansible script with some tweaks, so I had to move those over to the new config first).

However, on installation I was met with a server error, and the site was down for about an hour while I troubleshot the issue. It turned out to be a global issue with the Ansible script: the new version split the Docker containers across an internal and an external network, which stopped the pictrs and lemmy-ui containers from accessing the internet and broke the site's connectivity. There was also a bug with site icons, which had to be deleted via the database. You can see the troubleshooting steps here.

With the fix in place, the site came back up. I cleared the Cloudflare cache for good measure, and thankfully we've been pretty stable on 0.18 since.

To keep an eye on this, I've added a status page hosted on an external site, so if you ever can't access Lemmy.zip, you can check whether it's working at that link.
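
A status page like this essentially polls the site from somewhere else and records whether it answered. As a hedged illustration (not how the external status service actually works), here is a minimal Python sketch using only the standard library. The URL is just an example and the 10-second timeout is an arbitrary choice. It also keeps a timeout separate from an HTTP 5xx response, since those are different failure modes (as the Backblaze outage showed, a timeout further down the chain can surface as a 500).

```python
import socket
import urllib.error
import urllib.request
from typing import Optional


def classify(status: Optional[int], timed_out: bool = False) -> str:
    """Map a single probe result to a coarse status label."""
    if timed_out:
        return "timeout"
    if status is None:
        return "unreachable"
    if 200 <= status < 400:
        return "up"
    if status >= 500:
        return "server error"
    return "client error"


def probe(url: str, timeout: float = 10.0) -> str:
    """Fetch `url` once and classify the outcome."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return classify(resp.status)
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError: the server did answer.
        return classify(err.code)
    except urllib.error.URLError as err:
        # Connection failures (including connect timeouts) arrive wrapped here.
        return classify(None, timed_out=isinstance(err.reason, socket.timeout))
    except socket.timeout:
        # A timeout while waiting for the response headers is raised directly.
        return classify(None, timed_out=True)


if __name__ == "__main__":
    print(probe("https://lemmy.zip"))
```

One caveat for a real probe: sites behind Cloudflare may challenge or block the default `Python-urllib` user agent, which this sketch would report as a "client error" rather than an outage.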

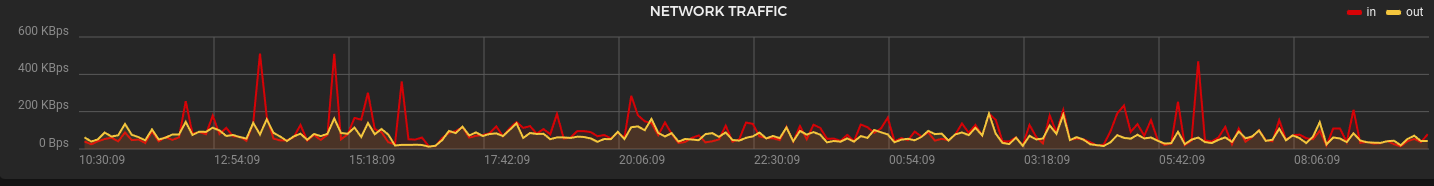

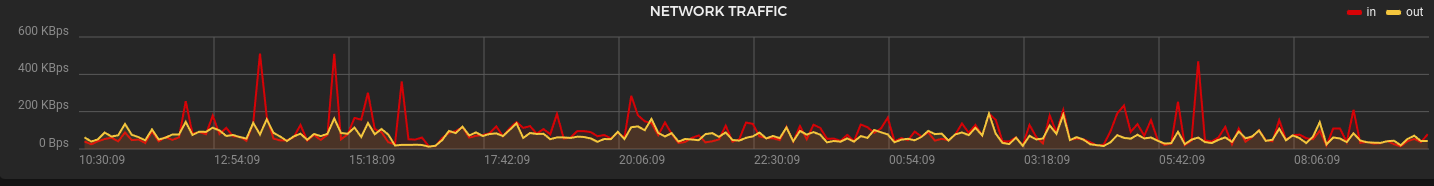

Here are some more lovely graphs for you!

CPU usage over the last 24 hours - hovering around 50% but seeing more spikes.

Network traffic over the last 24 hours - given we're offloading photos to an external server I expected this to be higher than last time.

Used storage space on the server

Funding

Finally, I need to raise a topic I've been hesitant to approach since day one. There are a couple of improvements I would like to make to Lemmy.zip, but they have a resource implication, and I can't guarantee I could fund them out of my own pocket. I'd like to take regular backups of the server in the most painless way possible, but this adds 20% to the server cost. I'd also like to look at switching to a dedicated server for better performance, but that costs far more than running on a VPS.

So, to be as transparent and open as possible, I've set up a funding page on a website dedicated to open, transparent funding:

https://opencollective.com/lemmyzip

This is totally, 100% optional. The only way I have to say thank you is to create a dedicated thread somewhere and make sure your name goes in it (assuming you want it to).

The Open Collective site lets me be as transparent as possible by adding expenses to the site, so you can see exactly what any money raised is spent on (it will only ever be spent on the site; that is a promise).

I hope this is OK with the community, as I genuinely feel torn about it. All I can promise is that I'm in this with Lemmy.zip for the long run.

I don't plan to do these weekly, as I don't imagine there will be enough new content. Hopefully the server development work will cool down for a bit, and I'll write these as and when something happens. Unless you all want a small weekly thread with server performance stats? Just let me know.

Thanks,

Demigodrick.

Interestingly, Finland has overtaken the USA over the last 24 hours; however, over the last week the USA has smashed it with over 900k requests, with France in second place on almost 500k requests!
