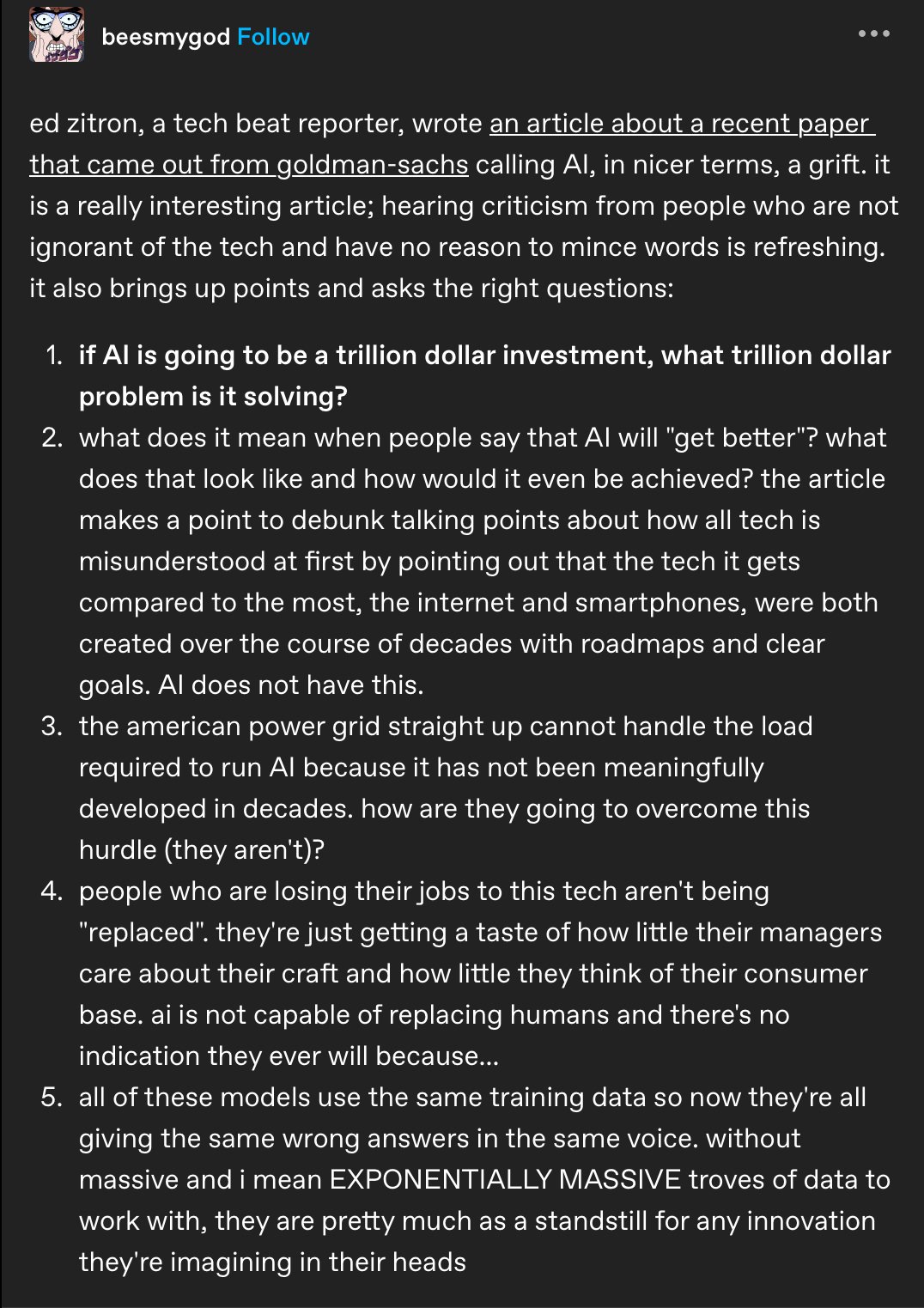

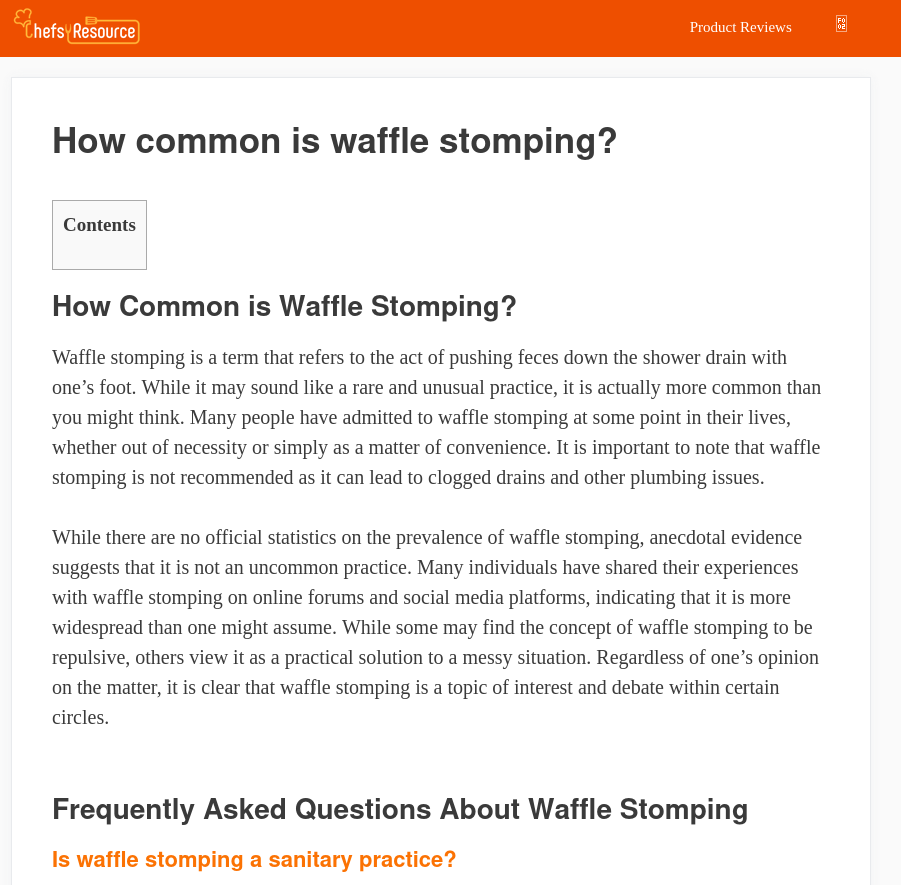

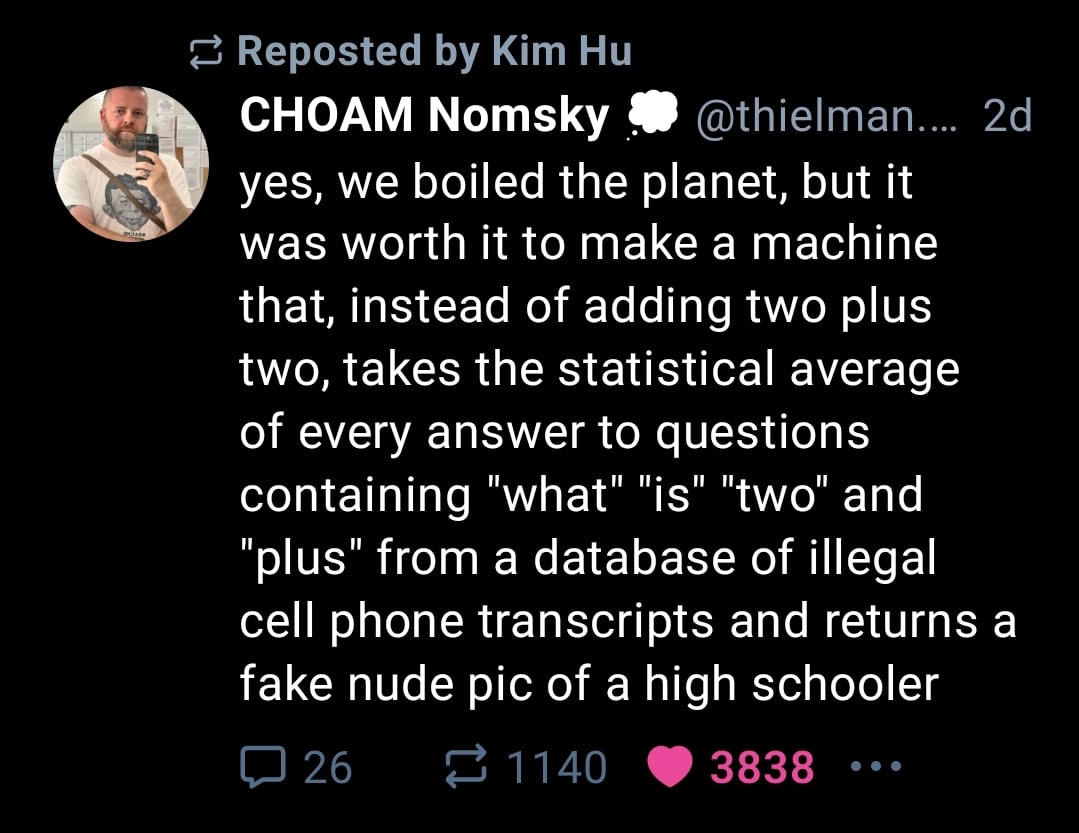

I want to apologize for changing the description without telling people first. After reading arguments about how overhyped AI has been, I'm not that frightened by it anymore. It's awful that it hallucinates, and that it spews garbage onto YouTube and Facebook, but it won't completely upend society. I'll be posting plenty of articles on AI hype, because they're quite funny, and they give me a sense of ease: blatant lies may be easy to tell, but actual evidence is much harder to fake.

I also want to make room for people who think that there's nothing anyone can do. I've come to realize that there might not be a way to attack OpenAI, MidJourney, or Stable Diffusion. These people, whom I'll call Doomers (a term from an AIHWOS article), are perfectly welcome here. You can certainly come along and read the AI Hype Wall Of Shame, or about the diminishing returns of Deep Learning. Maybe you can even become a Mod!

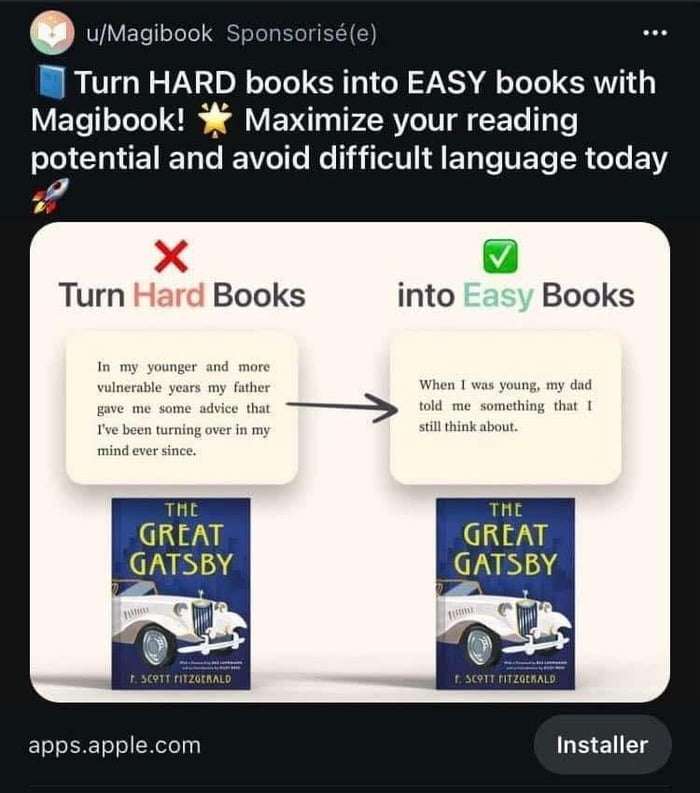

Boosters, or people who heavily use AI and see it as a source of good, ARE NOT ALLOWED HERE! I've seen Boosters dox, threaten, and harass artists over on Reddit and Twitter, and they constantly cheer on artists losing their jobs. They go against the very purpose of this community. If I see a comment here saying that AI is "making things good" or cheering on putting anyone out of a job, and the commenter does not retract the statement, that commenter will be permanently banned. FA&FO.