Whilst going through MAIHT3K's backlog, I ended up running across a neat little article theorising on the possible aftermath of the AI bubble, which left me wondering precisely what the main "residue", so to speak, would be.

The TL;DR:

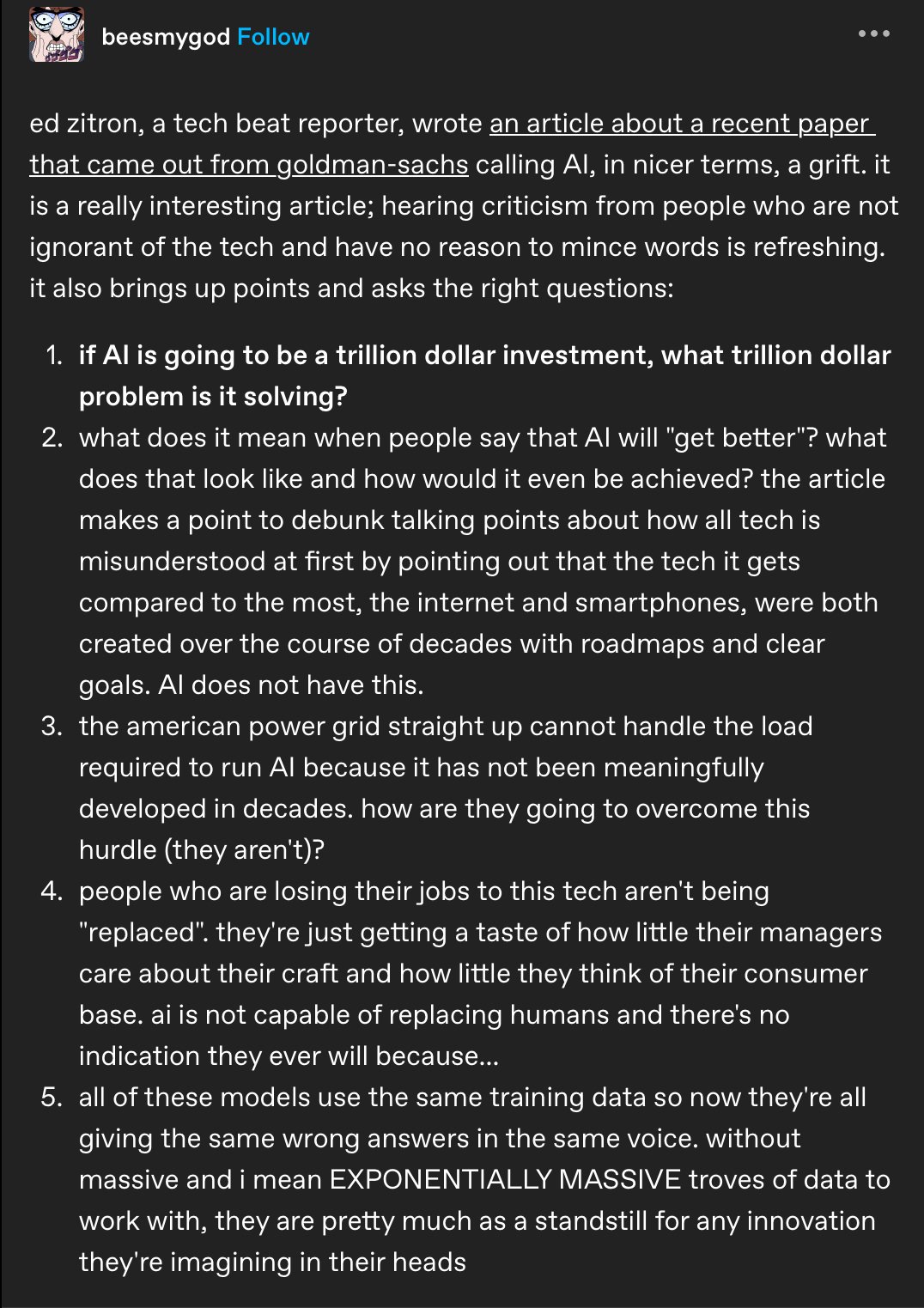

To cut a long story far too short, Alex, the writer, theorised the bubble would leave a "sticky residue" in the aftermath, "coating creative industries with a thick, sooty grime of an industry which grew expansively, without pausing to think about who would be caught in the blast radius" and killing or imperilling a lot of artists' jobs in the process - all whilst producing metric assloads of emissions and pushing humanity closer to the apocalypse.

My Thoughts

Personally, whilst I can see Alex's point, I think the main residue from this bubble is going to be large-scale resentment of the tech industry, for three main reasons:

- AI Is Shafting Everyone

It's not just artists who have been pissed off at AI fucking up their jobs, whether freelance or corporate - as Upwork, of all places, has noted in their research, pretty much anyone working right now is getting the shaft:

- Nearly half (47%) of workers using AI say they have no idea how to achieve the productivity gains their employers expect

- Over three in four (77%) say AI tools have decreased their productivity and added to their workload in at least one way

- Seventy-one percent are burned out and nearly two-thirds (65%) report struggling with increasing employer demands

- Women (74%) report feeling more burned out than do men (68%)

- 1 in 3 employees say they will likely quit their jobs in the next six months because they are burned out or overworked (emphasis mine)

Baldur Bjarnason put it better than me when commenting on these results:

It’s quite unusual for a study like this on a new office tool, roughly two years after that tool—ChatGPT—exploded into people’s workplaces, to return such a resoundingly negative sentiment.

But it fits with the studies on the actual functionality of said tool: the incredibly common and hard to fix errors, the biases, the general low quality of the output, and the often stated expectation from management that it’s a magic fix for the organisational catastrophe that is the mass layoff fad.

Marketing-funded research of the kind that Upwork does usually prevents these kind of results by finessing the questions. They simply do not directly ask questions that might have answers they don’t like.

That they didn’t this time means they really, really did believe that “AI” is a magic productivity tool and weren’t prepared for even the possibility that it might be harmful.

Speaking of the general low-quality output:

- The AI Slop-Nami

The Internet has been flooded with AI-generated garbage. Fucking FLOODED.

Doesn't matter where you go - Google, DeviantArt, Amazon, Facebook, Etsy, Instagram, YouTube, Sports Illustrated, fucking 99% of the Internet is polluted with it.

Unsurprisingly, this utter flood of unfiltered unmitigated endless trash has sent AI's public perception straight down the fucking toilet, to the point of spawning an entire counter-movement against the fucking thing.

Whether it be Glaze and Nightshade directly sabotaging datasets, "Made with Human Intelligence" and "Not By AI" badges proudly proclaiming human-done production, or Cara blowing up by offering a safe harbour from AI, it's clear there's a lot of people out there who want abso-fucking-lutely nothing to do with AI in any sense of the word as a result of this slop-nami.

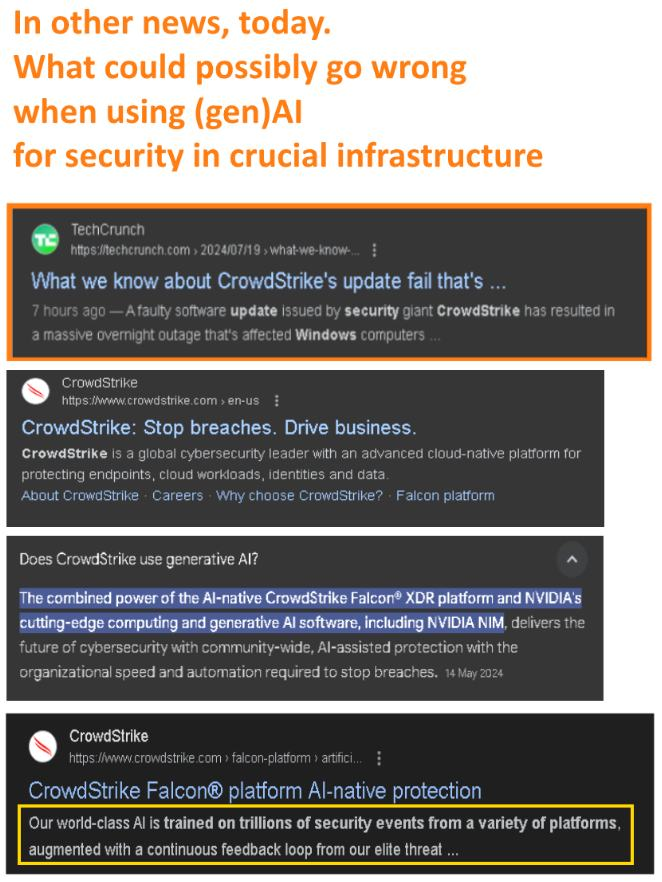

- The Monstrous Assholes In AI

On top of this little slop-nami, those leading the charge of this bubble have been generally godawful human beings. Here's a quick highlight reel:

I'm definitely missing a lot, but I think this sampler gives you a good gist of the kind of soulless ghouls who have been forcing this entire fucking AI bubble upon us all.

Eau de Tech Asshole

There are many things I can't say for sure about the AI bubble - when it will burst, how long and harsh the next AI/tech winter will be, what new tech bubble will pop up in its place (if any), etcetera.

One thing I feel I can say for sure, however, is that the AI bubble and its myriad harms will leave a lasting stigma on the tech industry once it finally bursts.

Already, it seems AI has a pretty hefty stigma around it - as Baldur Bjarnason noted when discussing the sentiment disconnect over AI between tech and the public:

To many, “AI” seems to have become a tech asshole signifier: the “tech asshole” is a person who works in tech, only cares about bullshit tech trends, and doesn’t care about the larger consequences of their work or their industry. Or, even worse, aspires to become a person who gets rich from working in a harmful industry.

For example, my sister helps manage a book store as a day job. They hire a lot of teenagers as summer employees and at least those teens use “he’s a big fan of AI” as a red flag. (Obviously a book store is a biased sample. The ones that seek out a book store summer job are generally going to be good kids.)

I don’t think I’ve experienced a sentiment disconnect this massive in tech before, even during the dot-com bubble.

On another front, there's the cultural reevaluation of the Luddites - once brushed off as naught but rejectors of progress, they are now coming to be viewed as folk heroes in a sense, fighting against misuse of technology to disempower and oppress, rather than technology as a whole.

There's also the rather recent SAG-AFTRA strike, which kicked off just under a year after the previous one and was started for similar reasons - to protect those working in the games industry from being shafted by AI like so many other people.

With how the tech industry was responsible for creating this bubble at every stage - research, development, deployment, the whole nine yards - it is all but guaranteed they will shoulder the blame for all that it's unleashed. Whatever happens after this bubble, I expect hefty scrutiny and distrust of the tech industry for a long, long time.

To quote @datarama, "the AI industry has made tech synonymous with “monstrous assholes” in a non-trivial chunk of public consciousness" - and that chunk is not going to forget any time soon.