me: clicks all the traffic lights

<wrong!>

I hate that captcha, the Google one where a single image (like a picture of a street with traffic lights, bikes, buses, etc.) is divided into squares. It is the worst one by far.

I've always thought it was intentional so that humans could train the edge detection of the machine vision algorithms.

It is. The actual test for humans there isn't the fact that you clicked the right squares, it's how your mouse jitters or how your finger moves a bit when you tap.

What really stresses me out is the question of whether a human on a motorcycle becomes part of the motorcycle.

Of course not. But what about that millimeter of tire? Or the tenth of the rearview mirror?

Of course, but CAPTCHA says no, do it again, to hell with those bicycle handlebars!

You can just click a couple squares and hit ok. It doesn’t have to be right.

So we just invert the logic now, right?

Make the captcha impossibly hard to get right for humans but doable for bots, and let people in if they fail the test.

I haven't been able to solve CAPTCHAs in years.

I suggest you get an appointment with your local blade runner.

Let me tell you about my mother

Are you a robot?

I guess not

Ditching CAPTCHA systems because they don't work anymore is kind of obvious. I'm more interested in what to replace them with; as in, what to use to keep bots from accessing a given resource and/or functionality.

In some cases we could use human connections to do that for us; that's basically what db0's Fediseer does, by creating a chain of groups of users (instances) guaranteeing each other.

Proof of work. This won't stop all bots from getting into the system, but it will prevent large numbers of them from doing so.

Proof of work could be easily combined with this, if the wasted computational cost is deemed necessary/worthy. (At least it's wasted CPU cost, instead of wasted human time like captcha.)

Tor has already implemented proof of work to protect onion services and from everything I can tell it has definitely helped. It's a slight inconvenience for users but it becomes very expensive very quickly for bot farms.
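A minimal sketch of the kind of proof of work being discussed, assuming a generic hashcash-style puzzle. This is not Tor's actual onion-service scheme, which uses its own puzzle design. The client brute-forces a nonce until SHA-256 of the challenge plus the nonce starts with `difficulty` zero bits, and the server checks the answer with a single hash. The function names and the 16-bit difficulty are illustrative choices.

```python
import hashlib
import os


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a hash digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        # bit_length() is how many bits the first nonzero byte actually uses
        bits += 8 - byte.bit_length()
        break
    return bits


def solve(challenge: bytes, difficulty: int) -> int:
    """Client side: brute-force a nonce (expected work doubles per extra bit)."""
    nonce = 0
    while True:
        digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce
        nonce += 1


def verify(challenge: bytes, nonce: int, difficulty: int) -> bool:
    """Server side: a single hash checks the client's work."""
    digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= difficulty


if __name__ == "__main__":
    challenge = os.urandom(16)  # fresh random challenge per request
    nonce = solve(challenge, difficulty=16)
    print(nonce, verify(challenge, nonce, difficulty=16))
```

The asymmetry is the point: each extra bit of difficulty roughly doubles the solver's expected work while verification stays at one hash. That costs little for one visitor but adds up quickly for a bot farm.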

What prevents the adversaries from guaranteeing their bots that then guarantee more bots?

The chain of trust being formed. If some adversary does slip past the radar, and gets guaranteed, once you revoke their access you're revoking the access of everyone else guaranteed by that person, by their guarantees, by their guarantees' guarantees, etc. recursively.

For example, let's say that Alice is confirmed human (as you need to start somewhere, right?). Alice guarantees Bob and Charlie, saying "they're humans, let them in!". Bob is a good user and guarantees Dan and Ed. Now all five have access to the resource.

But let's say that Charlie is an adversary. She uses the system to guarantee a bunch of bots. And you detect bots in your network. They all backtrack to Charlie; so once you revoke access to Charlie, everyone else that she guaranteed loses access to the network. And their guarantees, etc. recursively.

If Charlie happened to also recruit a human, like Fran, Fran will also get orphaned like the bots. However Fran can simply ask someone else to be her guarantee.
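A minimal sketch of the revocation described in the example above, assuming a simple tree where each member has exactly one guarantor. The `TrustGraph` class and its method names are hypothetical and are not Fediseer's API. Revoking a member removes everyone downstream of them, and an orphaned human like Fran can then be vouched for by someone else.

```python
from collections import defaultdict


class TrustGraph:
    """Toy vouching tree: every non-root member has exactly one guarantor."""

    def __init__(self, roots):
        self.roots = set(roots)            # confirmed humans ("you need to start somewhere")
        self.guarantor = {}                # member -> the member who vouched for them
        self.vouched = defaultdict(set)    # guarantor -> members they vouched for

    def has_access(self, member):
        return member in self.roots or member in self.guarantor

    def vouch(self, guarantor, member):
        """Let `guarantor` vouch for `member`, replacing any previous guarantor."""
        if not self.has_access(guarantor):
            raise PermissionError(f"{guarantor} has no access and cannot vouch")
        previous = self.guarantor.get(member)
        if previous is not None:
            self.vouched[previous].discard(member)
        self.guarantor[member] = guarantor
        self.vouched[guarantor].add(member)

    def revoke(self, member):
        """Remove `member` and, recursively, everyone downstream of them."""
        removed = set()
        stack = [member]
        while stack:
            current = stack.pop()
            if current in removed:
                continue
            removed.add(current)
            self.roots.discard(current)
            parent = self.guarantor.pop(current, None)
            if parent in self.vouched:
                self.vouched[parent].discard(current)
            # Everyone this member vouched for loses their guarantee too.
            stack.extend(self.vouched.pop(current, set()))
        return removed


if __name__ == "__main__":
    g = TrustGraph(roots=["Alice"])
    g.vouch("Alice", "Bob")
    g.vouch("Alice", "Charlie")
    g.vouch("Bob", "Dan")
    g.vouch("Bob", "Ed")
    for name in ("bot1", "bot2", "Fran"):
        g.vouch("Charlie", name)      # Charlie is the adversary
    g.vouch("bot1", "bot3")           # bots vouching for more bots
    print(sorted(g.revoke("Charlie")))
    g.vouch("Dan", "Fran")            # orphaned human finds a new guarantor
    print(g.has_access("Fran"))
```

The one-guarantor-per-member tree is a simplification; it keeps "who let this person in" unambiguous, so revocation is a plain walk down the subtree.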

[I'll edit this comment with a picture illustrating the process.]

EDIT: shitty infographic, behold!

Note that the Fediseer works in a simpler way, as each instance can only guarantee another instance (in this example I'm allowing multiple people to be guaranteed by the same person). However, the underlying reasoning is the same.

I feel like this could be abused by admins to create a system of social credit. An admin acting unethically could revoke access up the chain as punishment for being associated with people voicing unpopular opinions, for example.

Yeah, kind of idiotic that the video kept saying that captchas are useless; they're still preventing basic bots from filling in forms. If you took them away, fraudsters wouldn't have to pay humans to solve them or use fancy bots anymore, so bot traffic would increase.

So what would be a good solution to this? What is something simple that bots are bad at but humans are good at?

Knowing what we now know, the bots will instead just make convincingly wrong arguments which appear constructive on the surface.

I work in a related space. There is no good solution. Companies are quickly developing DRM that takes full control of your device to verify you're legit (think anticheat, but it's not called that). Android and iPhones already have it, Windows is coming with TPM, and macOS is coming soon too.

Edit: Fun fact, we actually know who is (beating the captchas). The problem is that if we blocked them, they would figure out how we're detecting them and work around it. Then we'd just be blind to the size of the issue.

Edit2: Puzzle captchas around images are still a good way to beat 99% of commercial AIs due to how image recognition works (the text is extracted separately with a much more sophisticated model). But if I had to guess, image puzzles will be better solved by AI in a few years (if not sooner).

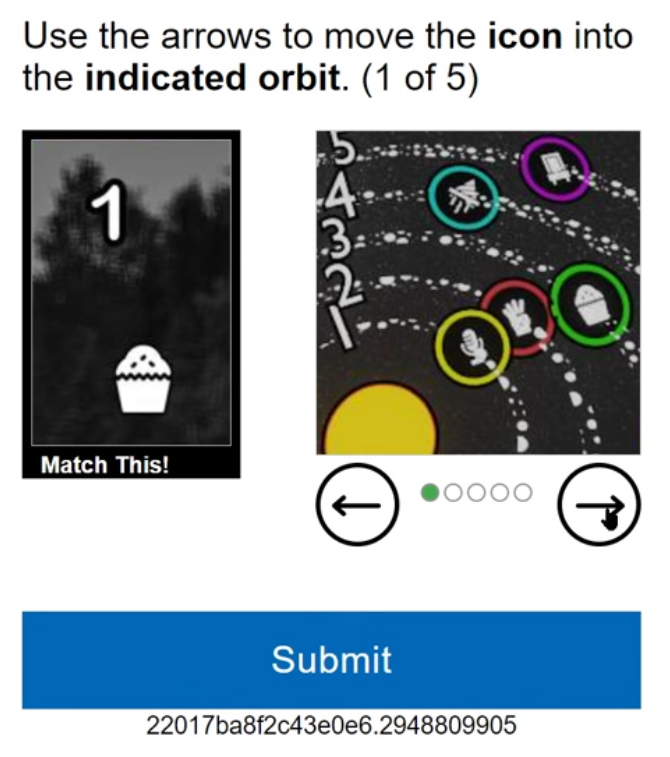

I love Microsoft’s email signup CAPTCHA:

Repeat ten times. Get one wrong, restart.

iPhones already have it

Private Access Tokens? Enabled by default in Settings > [your name] > Sign-In & Security > Automatic Verification. Neat that it works without us realizing it, but disconcerting nonetheless.

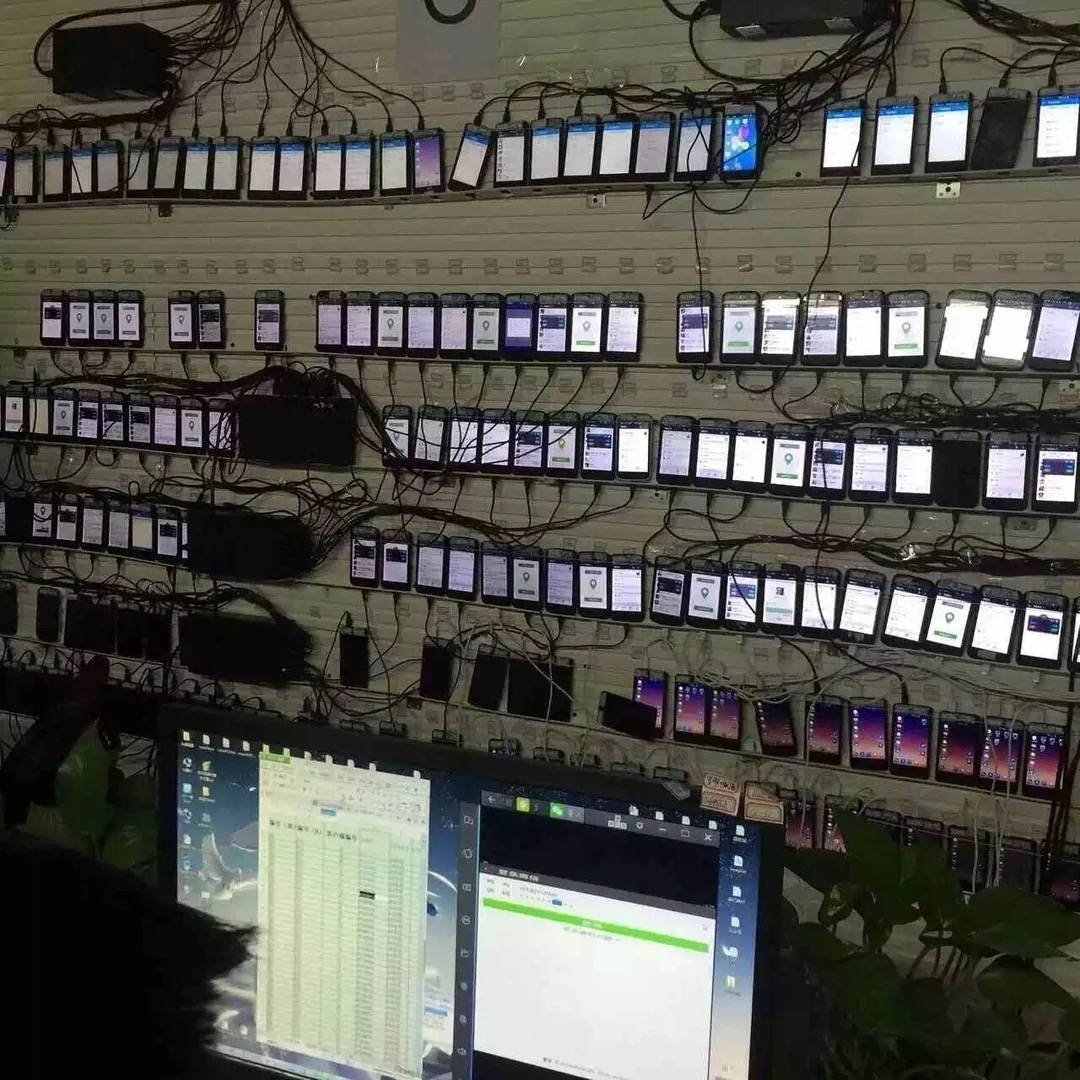

So, the spammers will need physical Android device farms…

More industry insight: walls of phones like this are how companies like Plaid operate for connecting to banks that don't have APIs.

Plaid is the backend for a lot of customer-to-business financial services, including H&R Block, Affirm, Robinhood, Coinbase, and a whole bunch more.

Edit: Just confirmed, they did this to get around rate limiting, not due to lack of API access. They also stopped 1-2 years ago.

Oh my god. I lost my fucking mind at the Microsoft one. You might as well have them solve a PhD-level theoretical physics question.

Isn't the real security from how you and your browser act before and during the captcha? The point was to label the data with humans to make robots better at it. Any trivial/novel task is sufficient generally, right?

How the hell am I supposed to know which parts of that picture contain bicycles?

Hey, failing at being a human being while trying to highlight where the bicycle starts and end on the picture is my job! You won't take that away from me, you fucking robot!

They may take our creative writing, they may take our digital art creation, they may take our ability to feed ourselves and our families. Hell, they may even take every single creative outlet humans have and relegate us to menial work in service of our capitalist overlords. But they will never take away clicking on boxes of pictures of bicycles and crosswalks!

Am I gonna need an AI to solve captchas now?

'Cause they've gotten so patently, stupidly ridiculous that I can't even solve them as a somewhat barely functional biological intelligence.

If some sites only need me to click the one checkbox to prove I am a human, why aren't ALL sites using this method?!

When you only have to click once, it means they have been gathering all your actions up to that point and are sure you're human. If you get asked to click images, it means they don't have enough information yet, or you failed some security step (like a wrong password) and the site told the captcha to be extra sure.
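To make that flow concrete, here is a purely hypothetical sketch. The signals, weights, and threshold are all invented for illustration, since real captcha services don't publish how they score sessions. Enough accumulated history earns the one-click checkbox; too little, or a red flag like a failed login, falls back to the image grid.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    # Hypothetical signals; real captcha services use their own, undisclosed features.
    prior_interactions: int   # page views, scrolls, mouse moves observed so far
    failed_logins: int        # e.g. a wrong password entered in this session
    known_browser: bool       # returning browser with existing history


def required_challenge(s: SessionSignals, threshold: float = 0.5) -> str:
    """Return 'checkbox' if confidence is high enough, else 'image_grid'."""
    # Illustrative weights only: more observed behavior -> more confidence.
    confidence = 0.6 * min(s.prior_interactions / 50, 1.0)
    if s.known_browser:
        confidence += 0.4
    # Each failed security step lowers confidence ("be extra sure").
    confidence -= 0.3 * s.failed_logins
    return "checkbox" if confidence >= threshold else "image_grid"
```

For example, `required_challenge(SessionSignals(100, 1, False))` returns `"image_grid"`: plenty of history, but the failed login pulls confidence below the threshold.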

Of course they can! Humans have been training them on this task for 20 years.

There are two Brewster Rockit strips that are applicable here.