this post was submitted on 22 Oct 2024

RPGMemes

Humor, jokes, memes about TTRPGs

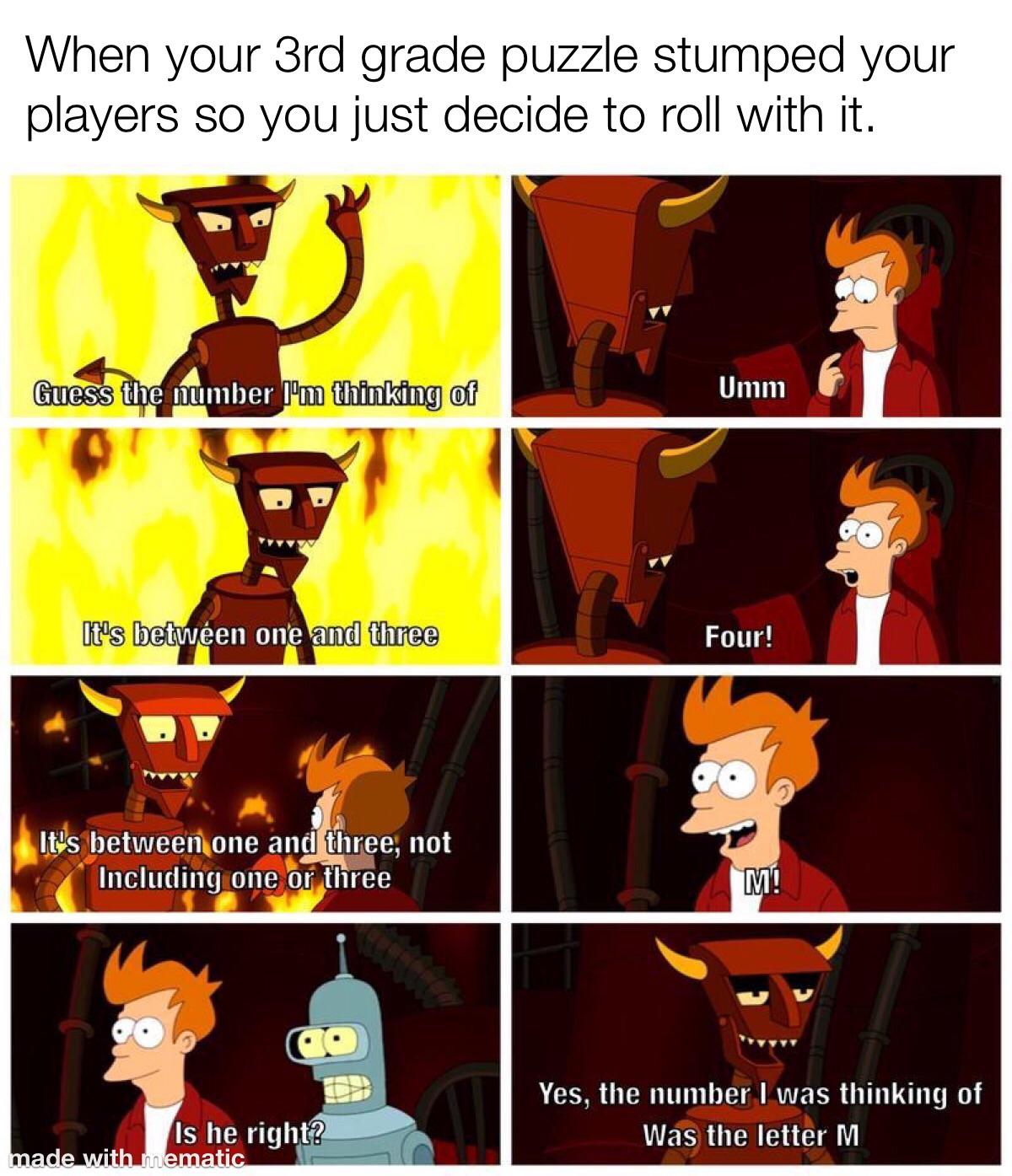

Had a puzzle thrown at me by my DM this weekend.

He admitted it was written by AI. I did not guess correctly.

@zaph @Stamets AI just doesn't handle those relations that well. It knows what riddles are supposed to look like, but I doubt it can make the mental leap between questions and answers.

AI doesn't have a mind to do mental leaps, it only knows syntax. Just a form of syntax so, so advanced that it sometimes accidentally gets things factually correct. Sometimes.

It's more advanced than just syntax. It should be able to understand the double meanings behind riddles. Or at the very least, that books don't have scales, even if it doesn't understand that the scales that a piano has aren't the same as the ones a fish has.

It doesn't understand anything. It predicts the next word based on the previous words, which is why I called it syntax. If you imagine a huge and vastly complicated series of rules about how likely one word is to follow a run of up to, say, 1000 others... That's an LLM.
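To make the "rules about how likely one word is to follow others" idea concrete, here is a toy sketch. It is not how a real LLM is built (those use neural networks over tokens, not lookup tables); it is only a tiny n-gram counter, and the corpus, function names, and `context_size` parameter are all made up for illustration.

```python
from collections import Counter, defaultdict


def train(text, context_size=1):
    """Count which word follows each run of `context_size` words."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        counts[context][words[i]] += 1
    return counts


def predict(model, context):
    """Return {next_word: probability} for the given context words."""
    key = tuple(word.lower() for word in context)
    counts = model.get(key)
    if not counts:
        return {}  # context never seen in the toy corpus
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}


# Hypothetical toy corpus, invented purely for this example.
corpus = (
    "the fish has scales . the piano has scales . "
    "the piano has keys . the book has pages . the book has words ."
)

# With one word of context, "has" is all the model sees,
# so it cannot tell a book from a piano.
short_model = train(corpus, context_size=1)
print(predict(short_model, ["has"]))

# With two words of context, "book has" and "piano has" get
# different predictions, still without any notion of meaning.
long_model = train(corpus, context_size=2)
print(predict(long_model, ["book", "has"]))
print(predict(long_model, ["piano", "has"]))
```

With the longer context the table never puts "scales" after "book has", simply because that sequence never appeared in the text it counted. Whether that counts as knowing anything about books is exactly what this thread is arguing about.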

It can predict that the word "scales" is unlikely to appear near "books". Do you understand what I mean now? Sorry, neural networks can't understand things. Can you make predictions based on the sensory input you've received so far?

Well, given that an LLM produced the nonsense riddle above, obviously it cannot predict that. It can predict the structure of a riddle perfectly well, and it can even get the rhyming right! But the extra layer of meaning involved in a riddle is beyond what LLMs are able to do at the moment. At least, all of the ones I've seen fall flat at this level of abstraction.