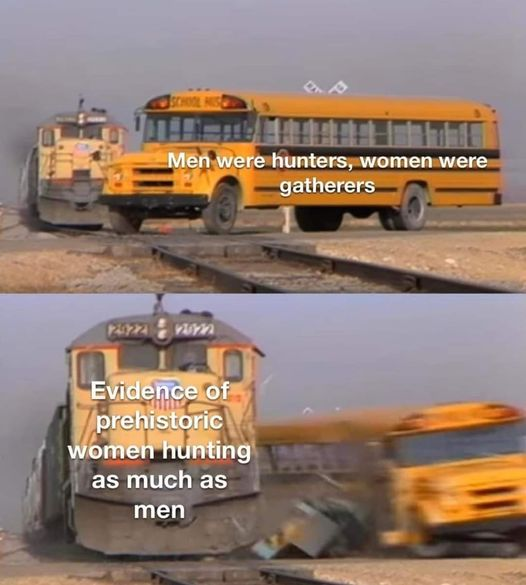

My SO has a theory that if a group of people lived in a harsh environment, i.e. having to work for what you had with no guarantee of food or safety, etc., it was common for women to work just as much as men. Such a society needed all hands on deck, so to speak. But when we started becoming "civilized" and things started getting made for us (as opposed to individuals making them themselves), women and men started having diverging roles. Essentially, there just wasn't enough work, so women's role turned into raising the babies, to fill the time. Eventually, for whatever reason, "civilized" society just forgot about the hard times and now assumes women have always been there just to raise babies.

Disclaimer: This is based on absolutely nothing. Maybe some random information explaining that women once did "men's" jobs too. Idk.