this post was submitted on 21 Oct 2024

Facepalm

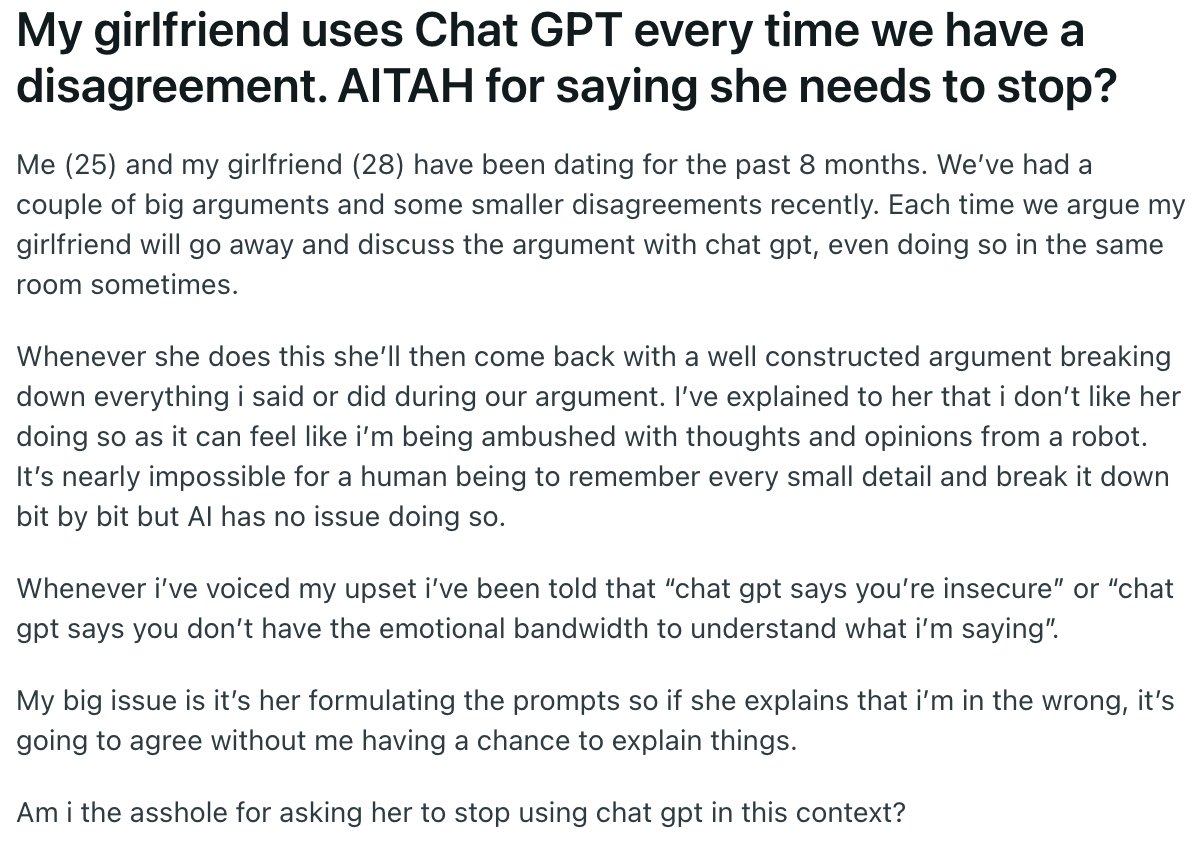

Ok, is this a thing now? I don't think I'd want to be in what is essentially a relationship with ChatGPT…

Yes... I know some people who rely exclusively on ChatGPT to mediate their arguments. Their reasoning is that it allows them to frame their thoughts and opinions in a non-accusatory way.

My opinion is that ChatGPT is a sycophant that just tries to agree with everything you say. Garbage in, garbage out. I suppose if the argument is primarily emotionally driven, with minimal substance, then having ChatGPT be the mediator might be helpful.

Well, it literally is a yes-man: it guesses which words are the most appropriate response to the words you said.

No… everything on AITA has always been fake bullshit

How would it be any different if she had been using Google? Just like...vibes?

The issue is that ChatGPT's logic works backwards: it takes the prompt as fact, then finds sources to back up whatever the prompt states. Additionally, ChatGPT will frame the argument in a seemingly reasonable and mild tone, making it appear unbiased and balanced. It's like the entirety of r/relationshipadvice rolled into one useless, billion-dollar spambot.

If someone isn't aware of ChatGPT's sycophantic nature, it's easy to interpret the response as measured and reliable. When the topic of using ChatGPT for relationship advice comes up (it happens concerningly often), I make a point of showing that you can get ChatGPT to agree with virtually anything you say, even in hypothetical cases where it's absurdly clear that the prompter is in the wrong. At least Google won't tell you that beating your wife is OK.