this post was submitted on 07 Oct 2023

Technology

Luckily, we've already begun developing tools to help detect AI images with higher accuracy than a human curator.

Unluckily, they're also flagging human-made images as AI-generated.

I said "with higher accuracy than a human curator." You didn't really build upon that, no offence. You also didn't upvote despite literally repeating something that I said. You just like to take up space in people's inboxes? I'm trying not to be an asshole about it, but I'm genuinely confused about the purpose of your reply.

You said we've "begun developing" tools with higher accuracy; I said we're already using tools with lower accuracy (a higher false positive rate).

(as for the rest... sorry for any imprecision, and I feel like you might want to get some sleep)
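Accuracy and false positive rate are two different numbers computed from the same confusion matrix, which is why a detector can look decent overall and still flag a lot of human-made images. A minimal sketch with hypothetical counts (not taken from any study or product), treating "AI-generated" as the positive class:

```python
def detector_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Basic metrics for a hypothetical 'is this AI-generated?' detector.

    tp: AI images correctly flagged      fp: human images wrongly flagged
    tn: human images correctly passed    fn: AI images missed
    """
    total = tp + fp + tn + fn
    return {
        # Share of all images classified correctly.
        "accuracy": (tp + tn) / total,
        # Share of human-made images that get flagged as AI.
        "false_positive_rate": fp / (fp + tn),
        # Share of AI images that get caught.
        "true_positive_rate": tp / (tp + fn),
    }

# Hypothetical test set: 100 AI images and 100 human-made images.
m = detector_metrics(tp=80, fp=40, tn=60, fn=20)
print(m)  # accuracy 0.7, yet 40% of human-made images are flagged
```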

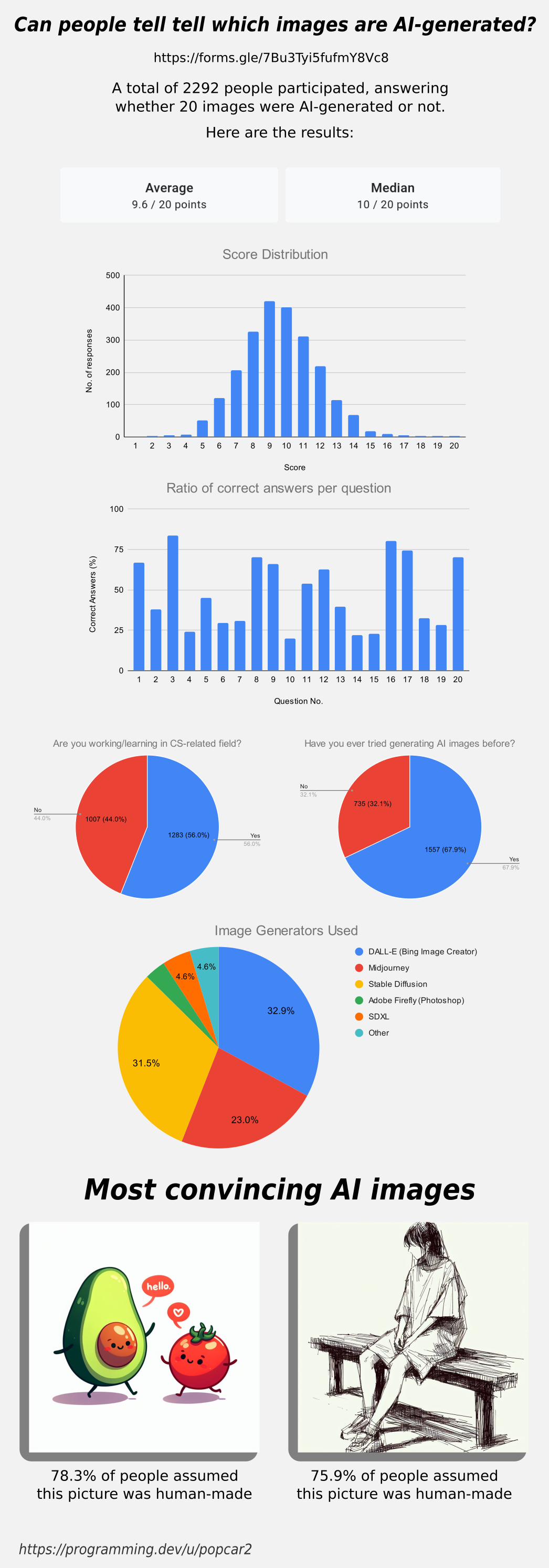

Commercially available AI detection algorithms are averaging around 60% accuracy; that's already 12 percentage points above the 48% human accuracy shown in this study.
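The gap between 60% and 48% can be read two ways, and they give different numbers. A quick check using only the figures from this thread (48% average human accuracy in the study, roughly 60% for commercial detectors):

```python
human_accuracy = 0.48     # average human accuracy reported in the study
detector_accuracy = 0.60  # "around 60%" for commercial detectors, per the comment

# Absolute difference, in percentage points.
points = (detector_accuracy - human_accuracy) * 100
# Relative improvement over the human baseline.
relative = (detector_accuracy - human_accuracy) / human_accuracy * 100

print(f"{points:.0f} percentage points, {relative:.0f}% relative")
# 12 percentage points, 25% relative
```

So "12%" is the absolute gap; relative to the human baseline it is a 25% improvement.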

This isn't possible as of now, at least not reliably. Yes, you can tailor a detector to one specific generative model, but because we have no reliable outlier detection (which is what training an "AI-made detector" would require), a generative model can always be trained with the detector incorporated into its training process. The generative model (or a new model designed only to perturb the output of the "original" generative model) would then learn to produce outliers that the outlier detector accepts as normal, effectively fooling the detector. An outlier is anything that pretends to be "normal" but isn't.

In short: as of now, we have no way to effectively and reliably defend against adversarial examples. This implies that we have no way to effectively and reliably detect AI-generated content.
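To make the "detector incorporated into the training process" point concrete, here is a toy sketch, not a real detector: a hypothetical logistic-regression "AI detector" with made-up weights, plus a sign-gradient (FGSM-style) perturbation standing in for the perturbing model described above. Because the detector is fixed and its gradient is available, the perturbation only has to follow that gradient until the score drops below 0.5.

```python
import math

# Hypothetical fixed detector: logistic regression over a 3-feature "image".
WEIGHTS = [1.5, -0.5, 2.0]
BIAS = -1.0

def detector_score(x: list[float]) -> float:
    """Toy detector's probability that x is AI-generated."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def evade(x: list[float], step: float = 0.05, max_steps: int = 200) -> list[float]:
    """Nudge x against the detector's gradient until it is no longer flagged.

    For a linear logit, dz/dx_i = w_i, so moving each feature by
    -step * sign(w_i) lowers the score fastest per unit of L-infinity change.
    """
    x = list(x)
    for _ in range(max_steps):
        if detector_score(x) < 0.5:
            break
        x = [xi - step * math.copysign(1.0, w) for xi, w in zip(x, WEIGHTS)]
    return x

generated = [1.0, 0.2, 0.8]        # toy "AI output"
print(detector_score(generated))   # ~0.88, flagged as AI
adversarial = evade(generated)
print(detector_score(adversarial)) # below 0.5, now passes as human
```

Real detectors are deep networks, and a real attacker would train a generator rather than hand-step a single sample, but the mechanism is the same: a fixed, differentiable detector supplies exactly the signal needed to evade it.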

Please correct me if I'm wrong; I might be mixing up some things.

It already exists: the average human accuracy in this study was only 48%. That's really easy to beat.

I said "reliably"; I should have said "...and generally". As I said, you can always tailor a detector model to a certain target model (generator). But the reliability of this defense rests on the assumption that the target model is static and doesn't change. This has been a common mistake in AI research on defensive techniques against adversarial examples. And if you think about it, it's a very strong assumption that doesn't make a lot of sense.
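The static-target assumption can be illustrated with a toy: a detector tuned to one fixed generator looks perfect on that generator and drops to chance once the generator changes. The one-dimensional "artifact score" values and the midpoint threshold below are made up for illustration and do not come from any real detector:

```python
# Hypothetical 1-D feature (e.g. some artifact score) per image.
HUMAN = [0.10, 0.15, 0.20, 0.25, 0.30]
GEN_V1 = [0.70, 0.75, 0.80, 0.85, 0.90]  # generator the detector was tuned on
GEN_V2 = [0.20, 0.25, 0.30, 0.35, 0.40]  # later generator with fewer artifacts

def fit_threshold(human: list[float], generated: list[float]) -> float:
    """Place the decision threshold midway between the two class means."""
    return (sum(human) / len(human) + sum(generated) / len(generated)) / 2

def accuracy(threshold: float, human: list[float], generated: list[float]) -> float:
    """Fraction classified correctly, where score > threshold means 'AI'."""
    correct = sum(h <= threshold for h in human) + sum(g > threshold for g in generated)
    return correct / (len(human) + len(generated))

t = fit_threshold(HUMAN, GEN_V1)
print(accuracy(t, HUMAN, GEN_V1))  # 1.0 on the generator it was tuned for
print(accuracy(t, HUMAN, GEN_V2))  # 0.5: every v2 image now passes as human
```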

Again, learning the characteristics of one or several fixed models is trivial and gets us nowhere, because evasive techniques (e.g. finding 'adversarial examples against the detector', so to speak) can't be prevented as of now, to the best of my knowledge.

Edit: link to a paper discussing problems with common defenses and attack-scenario modelling: https://proceedings.neurips.cc/paper/2020/hash/11f38f8ecd71867b42433548d1078e38-Abstract.html

With the direction you are forcing this conversation, away from practical examples and our current reality, the two of us are operating purely off hypotheticals. With that in mind, you could completely skip reading the rest of this comment and it won't impact your life in any way, shape, or form.

If you think about it, generative models that keep learning from data on the internet would actually make even an unchanging defensive model (and, to be clear, it's wrong to assume the AI-based defensive model would be static either) more accurate over time, because generating models that are less than 99% accurate would eventually feed back into themselves and lose efficiency over time. This is especially true when models are allowed to learn and grow from user prompts, because users are likely to resubmit the results or make generative API requests in repeating sequences to create shifting visuals for things like song visualisers or short video clips.
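One simple, well-known toy for the feedback effect described above (it models only the generator, not the detector): repeatedly fit a Gaussian by maximum likelihood to n samples drawn from the previous generation's fit. The maximum-likelihood variance estimator has expectation (n - 1)/n times the true variance, so in expectation the learned distribution narrows geometrically with each generation. The sample size and generation counts below are arbitrary choices for illustration:

```python
def expected_variance(sigma0_sq: float, n: int, generations: int) -> float:
    """Expected variance after repeatedly refitting a Gaussian to its own samples.

    Each generation draws n samples from the current model and refits the
    variance by maximum likelihood (dividing by n). That estimator's mean is
    (n - 1) / n times the true variance, so the expectation shrinks by that
    factor every generation.
    """
    return sigma0_sq * ((n - 1) / n) ** generations

for k in (0, 10, 100):
    print(k, round(expected_variance(1.0, n=10, generations=k), 4))
```

Larger n slows the narrowing but does not stop it, which is the sense in which a model trained on its own output keeps drifting away from the original data.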