cross-posted from: https://sh.itjust.works/post/998307

Hi everyone. I wanted to share some Lemmy-related activism I’ve been up to. I got really interested in the apparent surge of bot accounts that happened in June. Recently, I was able to play a small part in removing some of them. Hopefully by getting the word out we can ensure Lemmy is a place for actual human users and not legions of spam bots.

First some background. This won't be new to many of you, but I'll include it anyway. During the week of June 18 to June 25, as the Reddit migration to Lemmy was in full swing, there was a surge of suspicious account creation on Lemmy instances that had open registration and no captcha or email verification. Hundreds of thousands of accounts appeared and then sat inactive. We can only guess what they’re for, but I assume they are being planted for future malicious use (spamming ads, subversive electioneering, influencing upvotes to drive content to our front pages, etc.)

If you look at the stats on The Federation you might notice that even the shape of the Total Users graph is the same across many instances. User numbers ramped up on June 18, grew almost linearly throughout the week, and peaked on June 24. (I’m puzzled by the slight drop at the end. I assume it's due to some smoothing or rate-sensitive averaging that The Federation uses for the graphs?)

Here are total user graphs for a few representative instances showing the typical shape:

Clearly this is suspicious, and I wasn’t the only one to notice. Lemmy.ninja documented how they discovered and removed suspicious accounts from this time period: (https://lemmy.ninja/post/30492). Several other posts detailed how admins were trying to purge suspicious accounts. From June 24 to June 30 The Federation showed a drop in the total number of Lemmy users from 1,822,313 to 1,589,412. That’s 232,901 suspicious accounts removed! Great success! Right?

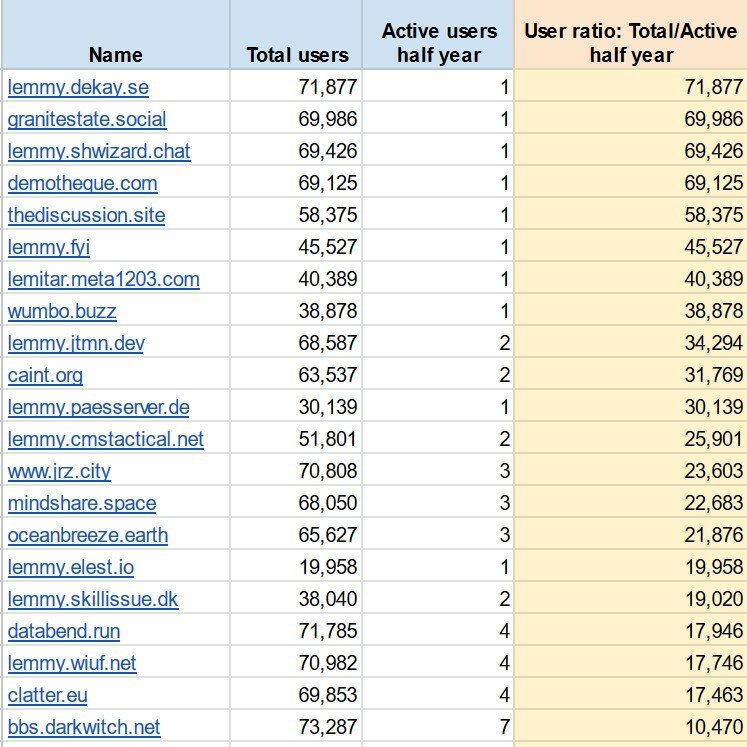

Well, no, not yet. There are still dozens of instances with wildly suspicious user numbers. I took data from The Federation and compared total users to active users on all listed instances. The instances in the screenshot below collectively have 1.22 million accounts but only 46 active users. These look like small self-hosted instances that have been infected by swarms of bot accounts.
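For anyone who wants to repeat the total-versus-active comparison, here is a minimal sketch of the idea. It assumes you have already collected per-instance totals into a list; the `InstanceStats` type, the hostnames, and the numbers are made up for illustration and are not real data or a real export format from The Federation. The default threshold of 10,000 is the ratio used later in this post.

```python
from dataclasses import dataclass


@dataclass
class InstanceStats:
    name: str          # hypothetical instance hostname
    total_users: int   # total registered accounts reported for the instance
    active_users: int  # active users reported for the instance


def suspicious_instances(stats, ratio_threshold=10_000):
    """Return (name, ratio) pairs whose total-to-active ratio exceeds the threshold."""
    flagged = []
    for s in stats:
        # Avoid dividing by zero: treat zero active users as one.
        ratio = s.total_users / max(s.active_users, 1)
        if ratio > ratio_threshold:
            flagged.append((s.name, ratio))
    # Largest ratio first.
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


# Made-up numbers for illustration only.
sample = [
    InstanceStats("small.example", 60_000, 2),
    InstanceStats("big.example", 90_000, 4_500),
    InstanceStats("empty.example", 25_000, 0),
]
flagged = suspicious_instances(sample)
for name, ratio in flagged:
    print(f"{name}: {ratio:.0f} accounts per active user")
```

A high ratio alone does not prove the accounts are bots; it only points at instances worth a closer look by their admins.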

As of this writing The Federation shows approximately 1.9 million total Lemmy accounts. That means the majority of all Lemmy accounts are sitting dormant on these instances, potentially to be used for future abuse.

This bothers me. I want Lemmy to be a place where actual humans interact. I don’t want it to become another cesspool of spam bots and manipulative shenanigans. The internet has enough places like that already.

So, after stewing on it for a few days, I decided to do something. I started messaging admins at some of these instances, pointing out their odd account numbers and referencing the lemmy.ninja post above. I suggested they consider removing the suspicious accounts. Then I waited.

And they responded! Some admins were simply unaware of their inflated user counts. Some had noticed but assumed it was a bug causing Lemmy to report an incorrect number. Others weren’t sure how to purge the suspicious accounts without nuking their instances and starting over. In any case, several instance admins checked their databases, agreed the accounts were suspicious, and managed to delete them. I’m told that the lemmy.ninja post was very helpful.

Check out these early results!

Awesome! Another 144k suspicious accounts are gone. A few other admins have said they are working on doing the same on their instances. I plan to message the admins at all the instances where the total accounts to active users ratio is above 10,000. Maybe, just maybe, scrubbing these suspected bot accounts will reduce future abuse and prevent this place from becoming the next internet cesspool.

That’s all for now. Thanks for reading! Also, special thanks to the following people:

@[email protected] for your helpful post!

@[email protected], @[email protected], and @[email protected] for being so quick to take action on your instances!

How do we know whether some reasonable % of those accounts aren't just lurkers who were trying out Lemmy but then did nothing with the account? A couple of years ago I did the same: registered an account, didn't do much with it, and kept using Reddit.

(Disclaimer: I haven't read into that referenced article by ninja at all, maybe it already says something related)

For one, it may be possible to filter accounts that were created but actually never used to log on, within a week or two of creation - those could go without much harm done IMO.

And/or, you could message such accounts and ask them for email verification, which would need to be completed before they can interact in any way (posting, commenting, voting). That latter one is quite probably currently not directly supported by the Lemmy software, but could be patched in when the need arises.
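The first idea above (filtering accounts that were created but never used to log on within a week or two) could look roughly like this. This is only a sketch under assumptions: the `Account` record and its `last_login` field are hypothetical, and I don't know whether Lemmy actually stores a last-login timestamp, so an admin would have to map this onto whatever their database really records.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Account:
    name: str
    created_at: datetime
    last_login: Optional[datetime]  # hypothetical field; None means never logged in


def never_used(accounts, now, grace=timedelta(days=14)):
    """Accounts older than the grace period that have never logged in."""
    return [
        a for a in accounts
        if a.last_login is None and now - a.created_at > grace
    ]


# Made-up accounts for illustration only.
now = datetime(2023, 7, 15)
accounts = [
    Account("dormant", datetime(2023, 6, 20), None),
    Account("lurker", datetime(2023, 6, 20), datetime(2023, 6, 21)),
    Account("brand_new", datetime(2023, 7, 10), None),
]
candidates = never_used(accounts, now)
print([a.name for a in candidates])
```

The grace period keeps brand-new accounts out of the list, and anyone who logged in even once (like a lurker) is left alone.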

You remember how we grumbled when Reddit required us to enter our emails for verification?

I doubt those users will answer. Very few people want to give out their emails, and I was happy that providing an email on Lemmy was optional.

If they haven't even logged in to their account once then any (highly unlikely) false positives of real accounts getting deleted will be an acceptable loss.

I agree. If the user did not even provide an email, then they likely know and accept the possible future loss of their account, since they cannot recover account access without an email.

Agreed

But they happily give it to Threads, no...?

Yes, I know, I'm being somewhat more provocative here than necessary.

More down to reality, thousands of accounts being registered within seconds, possibly all from the same IP, aren't ordinary user activity. And quite feasible to filter for.

Heck, you could even ask for the eMail and offer some "or, if you rather wouldn't, you could..." thing that basically serves as a CAPTCHA.
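As a rough illustration of the "thousands of accounts within seconds from the same IP" filter mentioned above, here is a minimal sliding-window sketch. It assumes the admin has a list of `(ip, created_at)` pairs from their own logs or database; whether Lemmy itself records registration IPs is an assumption here, and the window size and limit are arbitrary example values.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def burst_ips(signups, window=timedelta(seconds=60), max_signups=20):
    """Return IPs with more than max_signups registrations inside any window.

    signups: iterable of (ip, created_at) pairs (hypothetical input format).
    """
    by_ip = defaultdict(list)
    for ip, created_at in signups:
        by_ip[ip].append(created_at)

    flagged = set()
    for ip, times in by_ip.items():
        times.sort()
        start = 0
        for end, t in enumerate(times):
            # Move the left edge until the span is at most `window` long.
            while t - times[start] > window:
                start += 1
            if end - start + 1 > max_signups:
                flagged.add(ip)
                break
    return flagged


# Made-up signups for illustration only (documentation-range IP addresses).
base = datetime(2023, 6, 20, 12, 0, 0)
signups = [("203.0.113.7", base + timedelta(seconds=i)) for i in range(50)]
signups += [
    ("198.51.100.4", base),
    ("198.51.100.4", base + timedelta(days=1)),
]
bursts = burst_ips(signups)
print(bursts)
```

Shared IPs (VPNs, universities, mobile carriers) can also produce clusters of signups, so a hit here is a reason to look closer rather than an automatic ban.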

Yes, I do agree. Some people are selective in that regard: they don't want their "privacy" compromised, yet on another platform they have no qualms about giving out their personal details.

I think it is an acceptable loss on the fediverse side if we delete these accounts that were made and have had no activity in, say, a week or so.

I was just commenting that emailing them and waiting for a reply is a waste of time. Since the account has no activity, there is nothing to lose if it is deleted: no posts, no comments, no bookmarks, no follows. The account is akin to an empty bag.

I feel like providing an email is a much better alternative to having your account blanket banned due to creating it at a certain time.

If not emails maybe just being stricter when checking to see if the one creating an account is human?

This is my concern. I’m a Reddit refugee but I only want to reply to posts where I can provide technical knowledge. (Though I’ll happily upvote, downvote etc). Is lurking going to get people banned?

Yeah that worries me. Was definitely just a lurker at first while I got used to the place. They didn't ban me so I'm still here. Never had issues making or keeping accounts before but here lately it's been such a hassle. Instagram has taken to banning me immediately, I had to make 10 accounts in a row before they didn't immediately ban one.

Worst part is they don't tell you why and you can't fight it. I feel like it's bc I use a VPN then lurk but idk. Never making another account there, not worth it. Facebook did the same thing a few times until something finally clicked in their system and they quit deleting them.

TikTok has apparently done the same damn thing: I went to use my account the other day just to be informed it was deleted or banned, I don't remember which.

Think it said to check my email, which I frequently do and there was no mail saying my account was going to be deleted much less why.

It's ridiculous and rather than making a new account I just exit the site now.

So deleting them all? I don't really agree (though I hope they were indeed bots!)

I think what would make more sense is to be more strict when users actually create the accounts in the first place. I feel like those "select all the images with bikes" are ridiculous, I'm human and half the time Google or wherever says I didn't get it right but there HAS to be a simpler, better way.

I get hit with those all of the time, I think everyone with a VPN does so a few additional steps wouldn't be such a big deal and imo would be far less annoying than logging in and realizing your account was blanket banned without explanation just bc you happened to create it while bot accounts were being made.

Would help even more if users had a strong bot report feature where it was taken seriously. It could be pretty simple. See a bot? Report it.

The reviewer of the report, instead of just blanket banning should maybe contact the user first to check and see if they're human. As far as user retention it's better than just banning every bot we think we have.