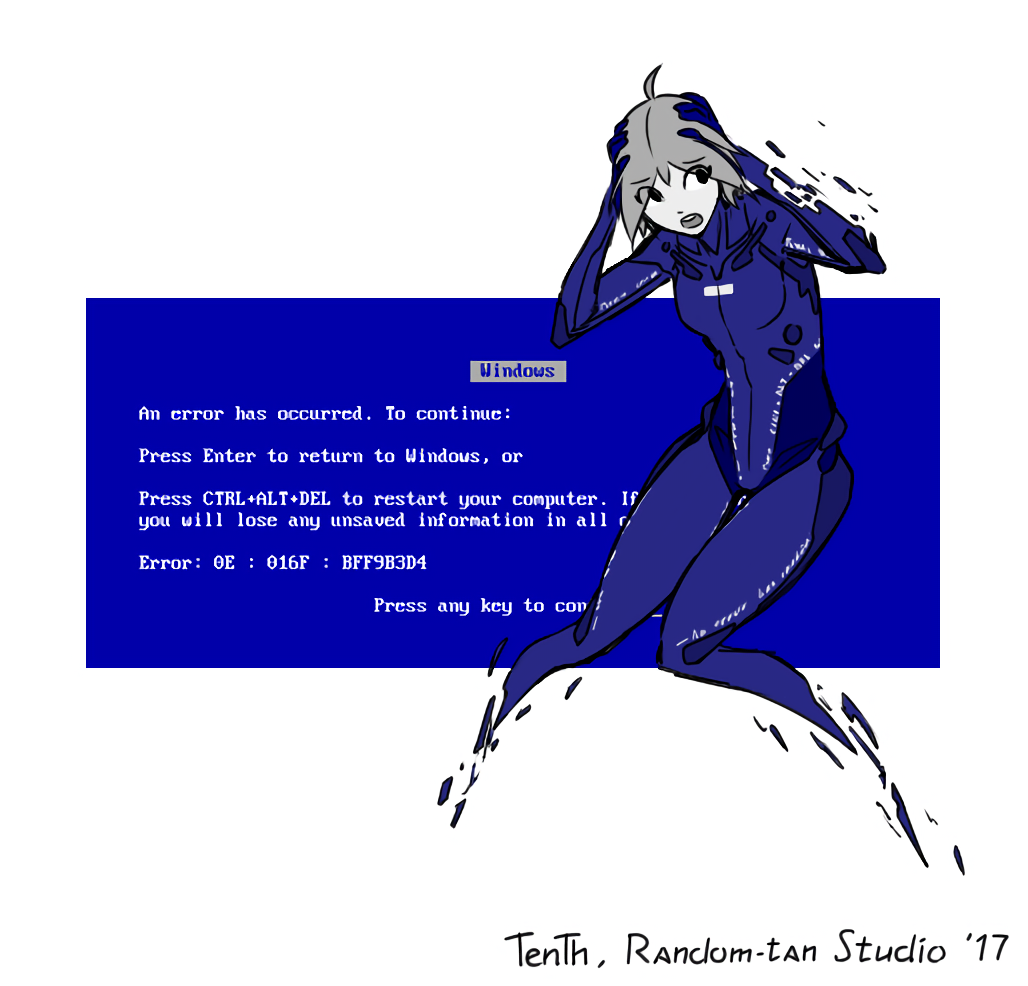

Yes, some Linux distros use blue kernel-panic screens too, but I'm tagging the post [Windows] because that's the "franchise" where the "character" debuted.

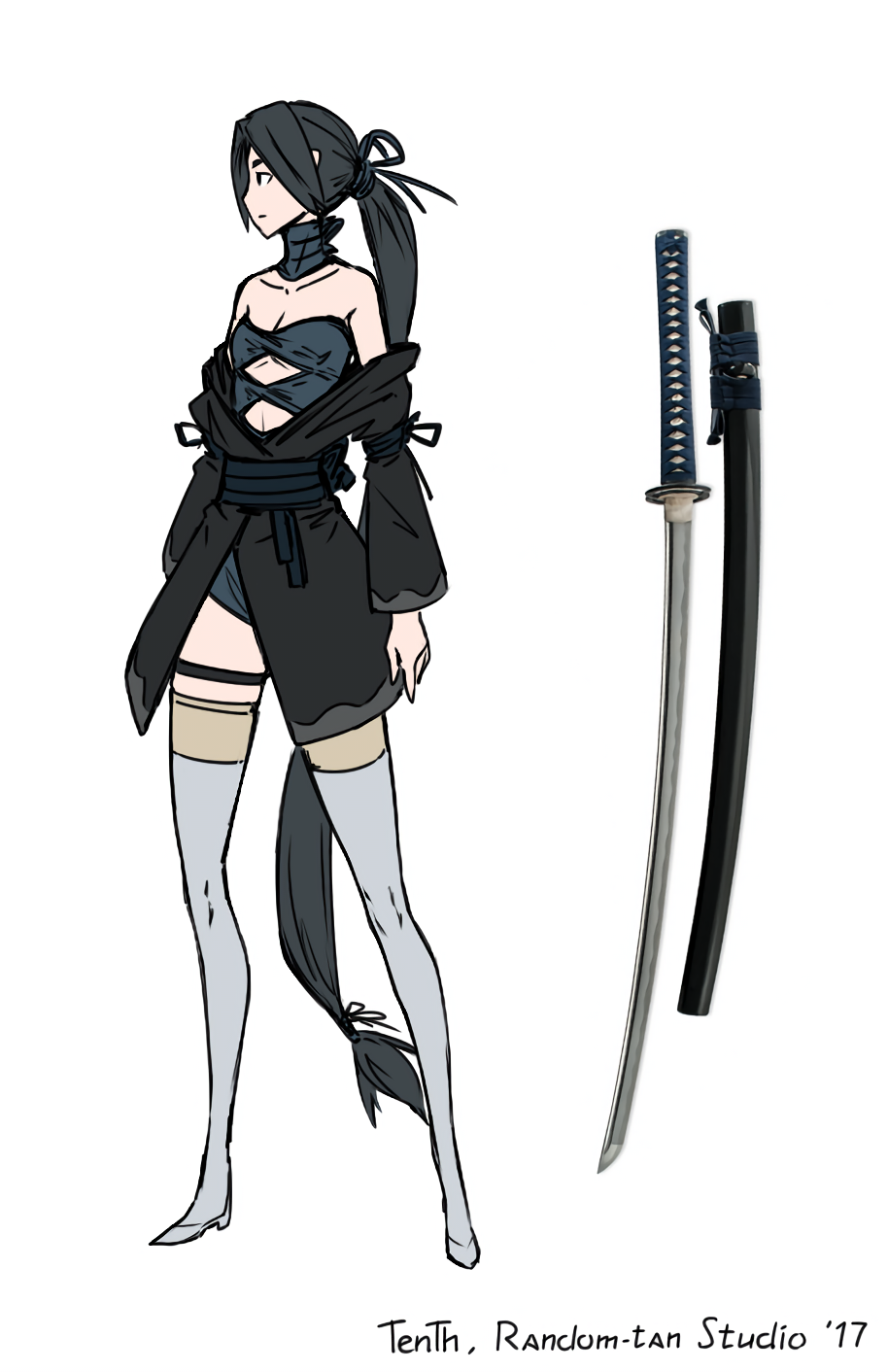

Artist: Onion-Oni aka TenTh from Random-tan Studio

Original post: #Humanization 11 on Tapas (warning: JS-heavy site)

Upscaled by waifu2x (model: upconv_7_anime_style_art_rgb). Original

Unlike with photos, upscaling digital art with a well-trained algorithm will likely have little to no undesirable effect. Why? Well, the drawing originated as a series of brush strokes, fill areas, gradients etc., which could be represented in a vector format but are instead rendered on a pixel canvas. As long as no feature is smaller than 2 pixels, the Nyquist-Shannon sampling theorem effectively says that the original vector image can be reconstructed losslessly. (This is not a fully accurate explanation; in practice, algorithms need more pixels to make a good guess, especially if compression artifacts are present.)

Suppose I gave you a low-res image of the flag of South Korea 🇰🇷 and asked you to manually upscale it for printing. Since the flag has no small features, there is no need to guess at detail (this assumption does not hold for photos). You could redraw it with vector shapes that use the same colors and recreate every stroke and arc in the image, and then render them at an arbitrarily high resolution. AI upscalers trained on drawings somewhat imitate this process: they are not adding detail, just trying to represent the original with more pixels so that it looks sharp on an HD screen. However, the original images are so low-res that artifacts are basically inevitable, which is why a link to the original is provided.
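To make the "redraw it as vector shapes, then render at any resolution" idea concrete, here is a minimal Python sketch. It is only an illustration of the flag thought experiment, not how waifu2x works internally. The function names `rasterize_disk` and `coverage`, and the disk's position and radius, are made up for this example.

```python
import math

def rasterize_disk(size, cx=0.5, cy=0.5, radius=0.3):
    """Render a filled disk onto a size x size grid of 0/1 pixels.

    The disk is described in resolution-independent unit coordinates
    (hypothetical values), so the same description works at any size.
    """
    rows = []
    for y in range(size):
        row = []
        for x in range(size):
            # Sample at the pixel centre, mapped into the unit square.
            px = (x + 0.5) / size
            py = (y + 0.5) / size
            inside = (px - cx) ** 2 + (py - cy) ** 2 <= radius ** 2
            row.append(1 if inside else 0)
        rows.append(row)
    return rows

def coverage(pixels):
    """Fraction of pixels that fall inside the shape."""
    total = sum(len(row) for row in pixels)
    return sum(sum(row) for row in pixels) / total

# The same "vector" description rendered at two resolutions.
low = rasterize_disk(16)
high = rasterize_disk(256)

print("true area of the disk:", math.pi * 0.3 ** 2)
print("16 x 16 coverage:     ", coverage(low))
print("256 x 256 coverage:   ", coverage(high))
```

Once the shape is known, the high-res render needs no guessing. The hard part, which the sketch skips, is recovering the shape description from the low-res pixels in the first place, and that is the part an upscaler has to estimate.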

Why? Passenger trains and subways are already very safe thanks to remote control & monitoring systems, dead man's switches etc. Many urban rail systems don't use drivers at all! Is there a subway accident from the past 20 years that could have been prevented with an extra driver?