I am probably unqualified to speak about this, as I am using a low-profile RX 550 and a 768p monitor and almost never play newer titles, but I want to kickstart a discussion, so hear me out.

The push for more realistic graphics has been going on for longer than most of us can remember, and it made sense for most of that time, as anyone who has looked at an older game can confirm (I am someone who enjoys making fun of weird-looking 3D people).

But I feel games' graphics have reached the point of diminishing returns. AAA studios today spend millions of dollars just to match the graphical level of their previous titles, often sacrificing other, more important things along the way, and people are unnecessarily spending lots of money on power-hungry, heat-generating GPUs.

I understand getting an expensive GPU for high-resolution, high-refresh-rate gaming, but for 1080p? You shouldn't need anything more powerful than a 1080 Ti for years. I think game studios should just slow down their graphical improvements, as they are unnecessary (in my opinion) and only prevent people with lower-end systems from enjoying games. And who knows, maybe we will start seeing cheap 50-watt gaming GPUs that can actually run games at medium/high settings; even iGPUs render good graphics now.

TLDR: Why pay more and hurt the environment with higher power consumption when what we have is enough, and possibly overkill?

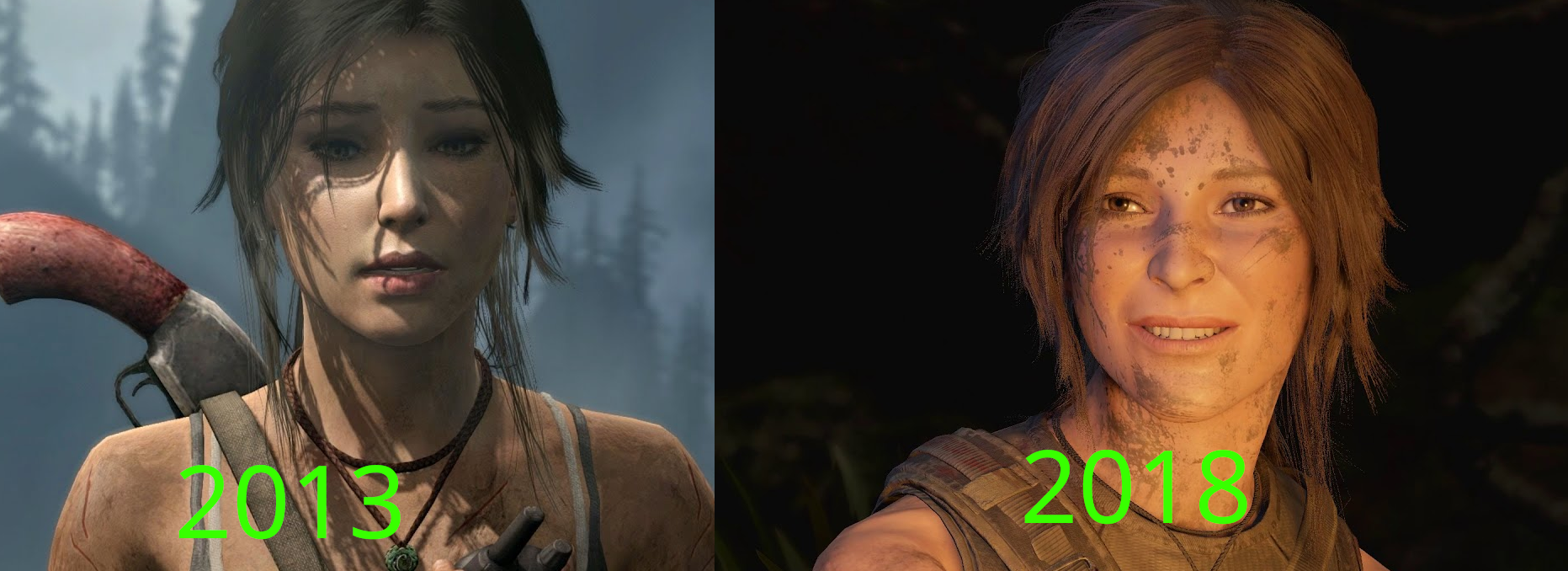

Note: it would be insane of me to claim that there is not a big difference between the two pictures (Tomb Raider 2013 vs. Shadow of the Tomb Raider 2018), but can you really call either of them bad, especially the right picture (5 years old)?

Note 2: this is not much more than a discussion starter and is unlikely to evolve into something larger.

Is it diminishing returns? Yes, of course.

Is it taxing on your GPU? Absolutely.

But, consider Control.

Control is a game made by the people who made Alan Wake. It's a fun AAA title that is better than it has any right to be. Packed with content. No microtransactions. It has it all. The only reason it's as good as it is? Nvidia paid them a shitload of money to put raytracing in their game to advertise the new (at the time) 20 series cards. Control made money before it even released thanks to GPU manufacturers.

Would the game be as good if it didn't have raytracing? Well, technically yes. You can play it without raytracing and it plays the same. But it wouldn't be as good if Nvidia hadn't paid them, and that means raytracing has to be included.

A lot of these big-budget AAA "photorealism" games for PC are funded, at least partially, by Nvidia or AMD. They're the games you'll get for free if you buy their new GPU that month. Consoles are the same way. Did Bloodborne need to have shiny blood effects? Did Spider-Man need to look better than real-life New York? No, but these games are made to sell hardware, and the tradeoff is that the games don't have to make piles of money (even if some choose to include microtransactions anyway).

Until GPU manufacturers can find something else to strive for, I think we'll be seeing these incremental increases in graphical fidelity, to our benefit.

This is part of the problem, not a justification. You are saying that companies such as Nvidia have so much power and money that the whole industry must waste effort making more demanding games just to keep their products relevant.

Right? "Vast wealth built on various forms of harm is good actually because sometimes rich people fund neat things that I like!" Yeah sure, tell that to somebody who just lost their house to one of the many climate-related disasters lately.

I'm actually disgusted that "But look, a shiny! The rich are good actually! Some stupid 'environment' isn't shiny cool like a videogame!" has over fifty upvotes to my one downvote. I can't even scrape together enough sarcasm at the moment to bite at them with. Just... gross. Depressing. Ugh.

Maybe it’s not what you’re saying but how you’re saying it.

Yeah, it's too bad that that's always true for every game and not just ~30 AAA games a year.

Too bad Dave the Diver, Dredge, and Silksong will never get made 😔

So the advantage is that it helps create more planned obsolescence and make sure there will be no one to play the games in 100 years?

Is that a real question? Like, what are we even doing here?

The advantage is that game companies are paid by hardware companies to push the boundaries of gamemaking, an art form that many creators enjoy working in and many humans enjoy consuming.

"It's ultimately creating more junk so it's bad" what an absolutely braindead observation. You're gonna log on to a website that's bad for the environment from your phone or tablet or computer that's bad for the environment and talk about how computer hardware is bad for the environment? Are you using gray water to flush your toilet? Are you keeping your showers to 2 minutes, unheated, and using egg whites instead of shampoo? Are you eating only locally grown foods because the real Earth killer is our trillion dollar shopping industry? Hope you don't watch TV or go to movies or have any fun at all while Taylor Swift rents her jet to Elon Musk's 8th kid.

Hey, buddy, Earth is probably over unless we start making some violent changes 30 years ago. Why would you come to a discussion on graphical fidelity to peddle doomer garbage? Get a grip.

reminder to be nice on our instance

Sorry hoss lost my cool won't happen again 😎

cheers m8 💜 it happens

To add another point: Control got added interest because its graphics were so good. Part of this was Nvidia providing marketing money that Remedy didn't have before, but I think the graphics themselves helped this game break into the mainstream in a way that Remedy's previous games did not. Trailers came out with these incredible graphics, and critics and lay gamers alike said "okay, I have to check this game out when it releases." Now, that added interest would mean nothing if the game wasn't also a great game beyond the initial impressions, but that was never a problem for Remedy.

For a more recent example, see Baldur's Gate 3. Larian plugged away at the Divinity: Original Sin series for years, and those games were well regarded, but I wouldn't say they quite hit "mainstream". Cue* BG3, where Larian got added money from Wizards of the Coast that they could invest into the graphics. The actual gameplay is not dramatically different from the Divinity games, but the improved graphics made people go "this is a Mass Effect", and suddenly this is the biggest game in the world.

We are definitely at a point of diminishing returns with graphics, but it cannot be denied that high-end, expensive graphics drive interest in new game releases, even if those graphics are not cutting-edge.

This comment is 100% on-point, I'm just here to address a pet peeve:

Queue = a line that you wait in, like waiting to check out at the store

Cue = something that sets off something else, e.g. "when Jimmy says his line, that's your cue to enter stage left"

So when you said:

What you meant was:

haha thanks, I thought it felt off when I was typing it.

"We are definitely at a point of diminishing returns with graphics,"

WTF. How can you look at current NeRF (neural radiance field) developments and honestly say that? This has never been further from the truth in the history of real-time graphics. When have we ever been so close to unsupervised, ACTUALLY photorealistic (in the strict sense) graphics?

Wizards of the Coast didn't give any funds to BG3; they were actually paid for the IP.

I played Control on a 10-year-old PC. I'm starting to think I missed out on something.

I played it on PS5 and immediately went for the higher frame rate option instead.

I think Ghostwire Tokyo was a much better use of RT than Control.

I also found Ratchet and Clank to be surprisingly good at 30fps. I can't put my finger on exactly what was missing from the 60fps non-RT version, but it definitely felt lesser somehow.

Machine learning/AI has been a MASSIVE driver for Nvidia for quite some time now. And bitcoin...