Ugh. Don’t get me started.

Most people don’t understand that the only thing it does is ‘put words together that usually go together’. It doesn’t know if something is right or wrong, just if it ‘sounds right’.

Now, if you throw in enough data, it’ll kinda sorta make sense with what it writes. But as soon as you try to verify the things it writes, it falls apart.
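To make "put words together that usually go together" concrete, here is a toy sketch. It assumes a simple bigram model, which is not how ChatGPT actually works (real LLMs are large neural networks over tokens); the corpus and the function names `train_bigrams` and `generate` are made up for illustration. It only shows that text can be chained from "what usually follows what" with no notion of whether the result is true.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Map each word to the list of words that followed it in the text."""
    followers = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    """Chain words by repeatedly picking a word that has followed the last one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = followers.get(out[-1])
        if not options:  # dead end: nothing ever followed this word
            break
        out.append(rng.choice(options))
    return " ".join(out)

# Hypothetical mini-corpus; every sentence in it is "true" on its own.
corpus = "the stadium hosted the olympics . the city has a stadium . the city hosted a fair ."
model = train_bigrams(corpus)
print(generate(model, "the", 8))
```

Every adjacent word pair in the output occurs somewhere in the corpus, so it "sounds right", but nothing checks whether the sentence as a whole is correct. That is how a city with a stadium can end up with an Olympic stadium.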

I once asked it to write a small article with a bit of history about my city and five interesting things to visit. In the history bit, it confused two people with similar names who lived 200 years apart. In the ‘things to visit’, it listed two museums by name that are hundreds of miles away. It invented another museum that does not exist. It also happily told me to visit our Olympic stadium. While we do have a stadium, I can assure you we never hosted the Olympics. I’d remember that, as I’m older than said stadium.

The scary bit is: what it wrote was lovely. If you read it, you’d want to visit for sure. You’d have no clue that it was wholly wrong, because it sounds so confident.

AI has its uses. I’ve used it to rewrite a text that I already had, and it does fine with tasks like that, because you give it the correct info to work with.

Use the tool appropriately and it’s handy. Use it inappropriately and it’s a fucking menace to society.

I know this is off topic, but every time I see you comment on a thread, all I can see is the Pepsi logo (I use the Sync app, for reference).

You know, just for you: I just changed it to the Coca-Cola Santa :D

I gave it a math problem to illustrate this and it got it wrong.

If it can’t do that, imagine adding nuance.

YMMV, I guess. I've given it many difficult calculus problems to help me through, and it went well.

Well, math is not really a language problem, so it's understandable LLMs struggle with it more.

But it means it’s not “thinking” as the public perceives AI.

Hmm, yeah, AI never really did think. I can't argue with that.

It's really strange now, if I mentally zoom out a bit, that we have machines that are better at language-based reasoning than logic-based reasoning (like math or coding).

And then Google to confirm the GPT answer isn't total nonsense.

I've had people tell me "Of course, I'll verify the info if it's important", which implies that if the question isn't important, they'll just accept whatever ChatGPT gives them. They don't care whether the answer is correct or not; they just want an answer.

Well yeah. I'm not gonna verify how many butts it takes to swarm Mount Everest, because that's not worth my time. The robot's answer is close enough to satisfy my curiosity.

For the curious, I got two responses with different calculations and different answers as a result. So it could take anywhere from 1.5 to 7.5 billion butts to swarm Mount Everest. Again, I'm not checking the math because I got the answer I wanted.

That is a valid tactic for programming or how-to questions, provided you know not to unthinkingly drink bleach if it says to.

Meanwhile Google search results:

- AI summary

- 2x "sponsored" result

- AI copy of Stack Overflow

- AI copy of Geeks4Geeks

- Geeks4Geeks (with AI article)

- the thing you actually searched for

- AI copy of AI copy of Stack Overflow

Should we place bets on how long until ChatGPT responds to anything with:

Great question, before I give you a response, let me show you this great video for a new product you'll definitely want to check out!

Nah, it'll be more subtle than that. Just like Brawndo is full of the electrolytes plants crave, responses will be full of the subtle product and brand references marketers crave. And A/B studies performed at massive scale in real time on unwitting users, and evaluated with other AIs, will help them zero in on the most effective way to pepper those in for each personality type it can differentiate.

"Great question, before I give you a response, let me introduce you to Raid: Shadow Legends!"

Google search is literally fucking dogshit and the worst it has EVER been. I'm starting to think fucking DuckDuckGo (which relies on Bing) gives better results at this point.

I’ve been using only DuckDuckGo for years now. If I don’t find something there, I don’t need it.

I have been using Duck for a few years now and I honestly prefer it to Google at this point. I'll sometimes switch to Google if I don't find anything on Duck, but that happens once every three or four months, if that.

We have new feature, use it!

No, it's broken and stupid, I prefer old feature.

... Fine!

breaks old feature even harder

Reject proprietary LLMs, tell people to "just llama it"

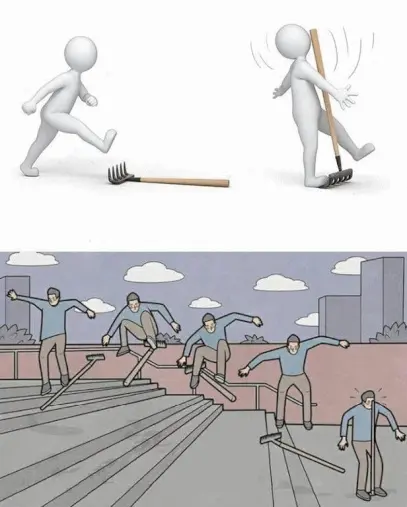

Top is proprietary LLMs vs. bottom self-hosted LLMs. Both end with you getting smacked in the face, but one looks far cooler or smarter to do, while the other is a streamlined web app that gets you there in one step.

But when it is open source, nobody gets regularly slain and the planet progressively destroyed due to mega conglomerate entities automating class violence

Did you ChatGPT this title?

"Infinitively" sounds like it could be a music album for a techno band.

Have they? Don't think I've heard that once, and I work with people who use ChatGPT themselves.

I'm with you. Never heard that. Never.

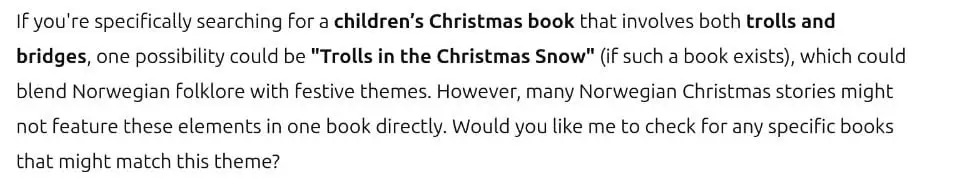

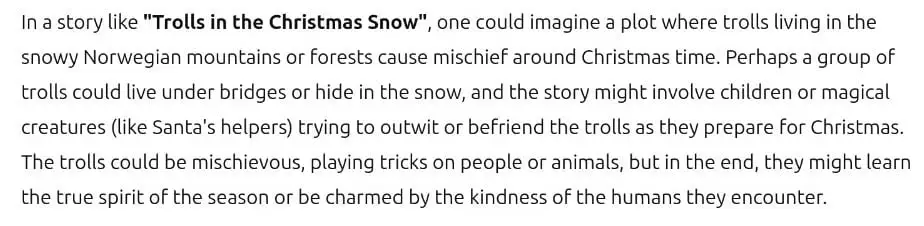

Last night, we tried to use ChatGPT to identify a book that my wife remembers from her childhood.

It didn’t find the book, but instead gave us a title for a theoretical book that could be written that would match her description.

At least it said if it exists, instead of telling you when it was written (hallucinating)

Maybe it’s trying to motivate me to become a writer.

How long until ChatGPT starts responding "It's been generally agreed that the answer to your question is to just ask ChatGPT"?

I'm somewhat surprised that ChatGPT has never replied with "just Google it, bruh!" considering how often that answer appears in its data set.

just call it cgpt for short

Computer Generated Partial Truths

Sadly, partial truths are an improvement over some sources these days.

Which is still better than "elementary truths that will quickly turn into shit I make up without warning", which is where ChatGPT is and will forever be stuck.

Both suck now.

I have to say, look it up online and verify your sources.

GPT's natural language processing is extremely helpful for simple questions that have historically been difficult to Google because they aren't a concise concept.

The type of thing that is easy to ask but hard to create a search query for, like tip-of-my-tongue questions.

Google used to be amazing at this. You could literally search "who dat guy dat paint dem melty clocks" and get the right answer immediately.

I say, "Just search it." Not interested in being free advertising for Google.