this post was submitted on 21 Oct 2024

chapotraphouse

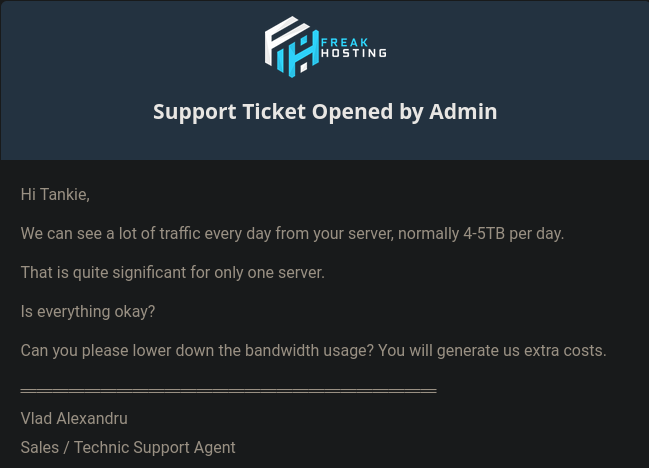

So I've never actually used nginx or built any application from zero to one, so I can't help with the actual work, just general architecture advice, since I only code for work.

Anyways, I think the nginx config I was talking about is

proxy_cache_min_uses

So the idea is that in real-life content hosting, a lot of resources only get accessed once and then never again for a long time (think some guy scrolling obscure content late at night cause they're bored af), so you don't want these filling up your cache.
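To make the idea concrete, here is a minimal Python sketch of "only cache something after it has been requested N times". In nginx itself the directive takes a number (`proxy_cache_min_uses 2;`, the default is 1) and nginx does the counting for you; the class and names below (`MinUsesCache`, `fetch`) are hypothetical and only model the behavior, they are not how nginx implements it.

```python
from collections import Counter


class MinUsesCache:
    """Toy cache that stores an item only once it has been requested min_uses times."""

    def __init__(self, min_uses=2):
        self.min_uses = min_uses
        self.requests = Counter()  # request count per key (a real server would bound this)
        self.store = {}            # the cached responses

    def get(self, key, fetch):
        """Return (body, status). fetch(key) stands in for a request to the origin."""
        if key in self.store:
            return self.store[key], "HIT"
        self.requests[key] += 1
        body = fetch(key)
        # Only keep the response once the key has proven it gets repeat traffic.
        if self.requests[key] >= self.min_uses:
            self.store[key] = body
        return body, "MISS"
```

With `min_uses=2`, the first request for a video is served from the origin and not stored, the second request is also a miss but gets stored, and the third is a cache hit. Something requested exactly once never takes up cache space.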

It will take a lot of time to develop, but you can optimize for the fact that videos/content are often either one-hit wonders like the scenario above or have short-lived popularity. E.g. a video gets posted to hexbear and a hundred people view it over 1 week, so you want to cache it, but after the post gets buried the video fades back into obscurity, so you don't want it to outlive its usefulness in the cache.

There are some strategies to do this. This new FIFO queue replacement policy deals with the one-hit-wonder situation: https://dl.acm.org/doi/pdf/10.1145/3600006.3613147
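As a rough illustration of how FIFO queues can filter out one-hit wonders, here is a simplified sketch: new items go into a small probationary FIFO, and only items that get hit while on probation (or that come back shortly after being evicted) are moved into the main FIFO. This is my own simplification and not the exact algorithm from the linked paper; the class name, the 10% split, and the ghost list size are all assumptions.

```python
from collections import OrderedDict, deque


class TwoQueueFifo:
    """Small probationary FIFO in front of a main FIFO, plus a 'ghost' list of evicted keys."""

    def __init__(self, capacity, small_fraction=0.1):
        self.small_cap = max(1, int(capacity * small_fraction))
        self.main_cap = max(1, capacity - self.small_cap)
        self.small = OrderedDict()           # key -> [value, was_hit], oldest first
        self.main = OrderedDict()            # key -> value, oldest first
        self.ghost = deque(maxlen=capacity)  # keys only, no data (linear lookup is fine for a sketch)

    def get(self, key):
        if key in self.main:
            return self.main[key]
        if key in self.small:
            entry = self.small[key]
            entry[1] = True  # remember that this item was reused while on probation
            return entry[0]
        return None

    def put(self, key, value):
        if key in self.main:
            self.main[key] = value
            return
        if key in self.small:
            self.small[key][0] = value
            return
        if key in self.ghost:
            # Seen recently and requested again: not a one-hit wonder.
            self.ghost.remove(key)
            self._insert_main(key, value)
            return
        self.small[key] = [value, False]
        if len(self.small) > self.small_cap:
            old_key, (old_value, was_hit) = self.small.popitem(last=False)
            if was_hit:
                self._insert_main(old_key, old_value)
            else:
                self.ghost.append(old_key)  # drop the data, keep only the key

    def _insert_main(self, key, value):
        self.main[key] = value
        if len(self.main) > self.main_cap:
            self.main.popitem(last=False)  # plain FIFO eviction
```

The point of the design is that a long scan of never-repeated items only churns the small queue, so the popular stuff in the main queue survives it.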

Another strategy you can implement, which is what YouTube implements: they use an LRU cache, but they only cache a new item once a video has been requested by 2 unique clients and the time between those 2 requests is shorter than the time since the oldest item in the LRU cache was last retrieved (which they track in a persistent metadata cache along with other info; you can read the paper above to get an idea of what a metadata cache would store). They also wrote a math proof showing this algorithm does better than a typical LRU cache.
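Here is how I would sketch that admission rule in Python, going only off the description above. Everything here is an assumption on my part: the class and method names are made up, the metadata is a plain dict instead of a persistent store, "unique clients" is reduced to "the previous miss came from a different client id", and I chose to admit freely while the cache still has free space.

```python
from collections import OrderedDict


class AdmissionLru:
    """LRU cache that admits an item only after two close-together requests from different clients."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.cache = OrderedDict()  # key -> (value, last_access_time), least recently used first
        self.seen = {}              # metadata: key -> (client_id, time) of the previous miss

    def request(self, key, client_id, now, fetch):
        """Return (value, status). fetch(key) stands in for a request to the origin."""
        if key in self.cache:
            value, _ = self.cache[key]
            self.cache[key] = (value, now)
            self.cache.move_to_end(key)
            return value, "HIT"
        value = fetch(key)
        if self._should_admit(key, client_id, now):
            self.seen.pop(key, None)
            self.cache[key] = (value, now)
            if len(self.cache) > self.capacity:
                self.cache.popitem(last=False)  # evict the least recently used item
        else:
            self.seen[key] = (client_id, now)
        return value, "MISS"

    def _should_admit(self, key, client_id, now):
        if key not in self.seen:
            return False  # first time we see this key
        prev_client, prev_time = self.seen[key]
        if prev_client == client_id:
            return False  # same client again does not count as a second unique client
        if len(self.cache) < self.capacity:
            return True   # assumption: nothing to compare against while there is free space
        oldest_key = next(iter(self.cache))
        oldest_age = now - self.cache[oldest_key][1]
        # Admit only if this key is being re-requested faster than the LRU tail is.
        return (now - prev_time) < oldest_age
```

The comparison in the last line is the interesting bit: a new video only displaces the oldest cached item if its own requests are arriving closer together than that item's, so a burst of one-off views cannot flush content that is still being watched.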

Also, I assume nginx/you are probably already doing this, but caching what a user sees on the site before clicking into a video should be prioritized over the actual videos, i.e. thumbnails, video titles, view counts, etc. Users will scroll past a ton of videos but only click on very few of them, so you get more use out of the cache this way.

I'll try to dig through my brain and remember other optimizations YouTube does that are also feasible for tankie tube, and let you know as many details as I can remember. This is all just from memory of reading their internal engineering docs back when I had access. Most of it is just based on having a fuckload of CDNs around the entire world and the best hardware, though.

That's a good idea for the caching strategy, thanks! I'll research how to implement it in nginx.

As for your professional friend, right now I'm more limited by money than time or technical skills, so I'm going to hack away for a little longer.