this post was submitted on 28 May 2024

chapotraphouse

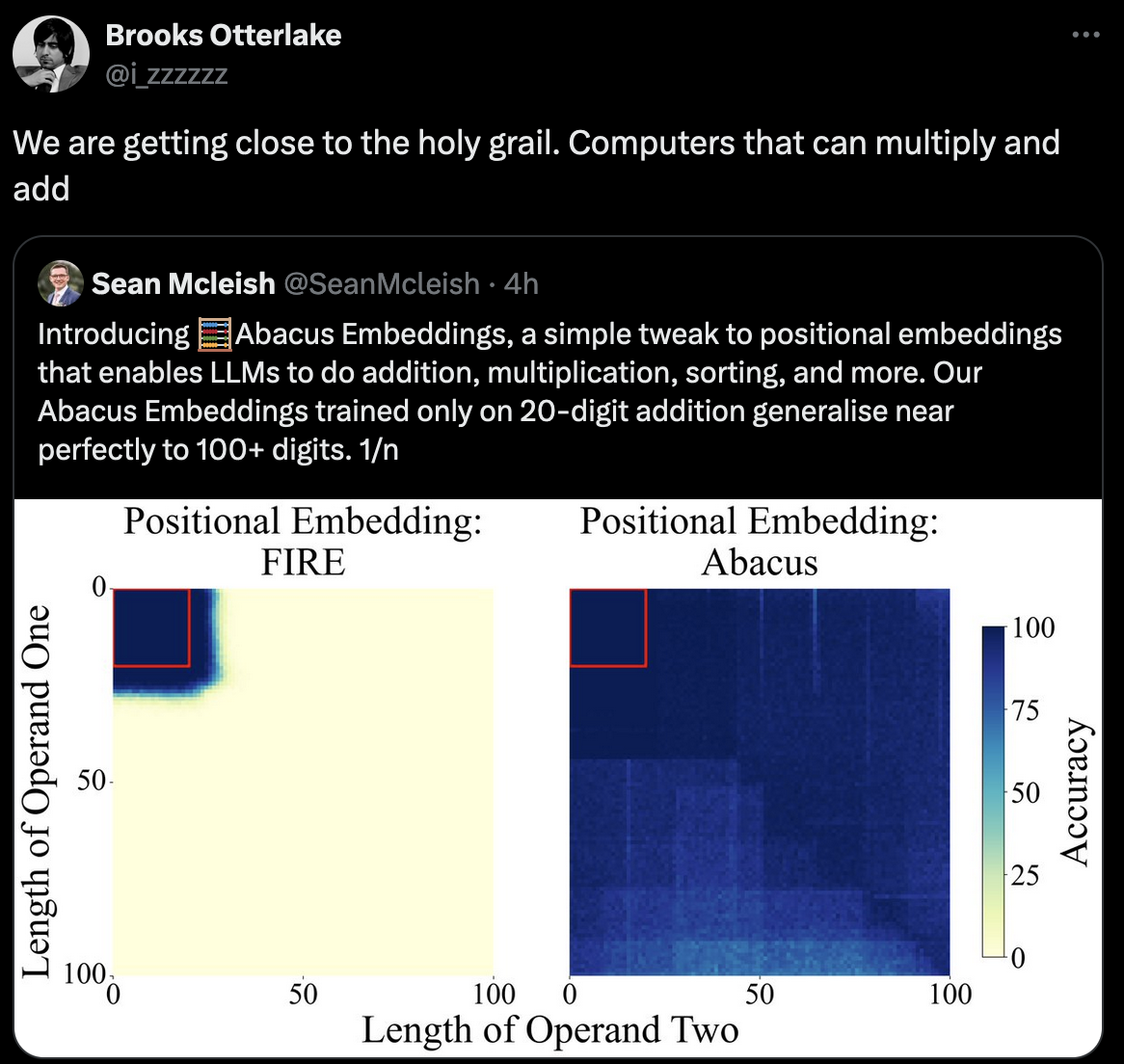

Hi, I do AI stuff. This is what RAG (retrieval-augmented generation) is. However, it's not really teaching the AI anything: technically, it's a separate process in which relevant text is retrieved and injected into the prompt at an opportune time. By teaching the AI more stuff (i.e., training it), you can have it reason through more complex tasks more accurately. So teaching it how to properly reason through math problems will also help it reason through more complex tasks without hallucinating.
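A toy sketch of the retrieve-then-inject idea, assuming a tiny in-memory list of documents and simple word-overlap (bag-of-words cosine) similarity in place of the embedding model and vector store a real RAG setup would use. All function names here are hypothetical and no model is called; the point is that retrieval only changes the prompt text, not the model's weights.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that isn't a letter or digit
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Rank stored documents by similarity to the query and keep the top k
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Inject the retrieved passages into the prompt; the model itself is untouched
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Use the following context to answer.\n{context}\n\nQuestion: {query}\nAnswer:"


docs = [
    "The office closes at 6 pm on Fridays.",
    "Parking is free for visitors.",
    "The cafeteria serves lunch from noon.",
]
print(build_prompt("When does the office close on Fridays?", docs))
```

The resulting prompt string is what would be sent to the model; swapping the word-overlap scoring for embeddings changes which passages get picked, not the overall flow.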

For example, Llama 3 and various Chinese models are fairly good at reasoning through long-form math problems. China probably has the best math and language-translation models. I'll probably be doing a Q&A on here soon about Qwen1.5 and discussing Xi's Governance of China.

Personally, I've found LLMs to be more useful for text prediction while coding, for translating languages locally (notably, with Qwen you can even get it to accurately translate into English creoles or regional dialects of Chinese without losing tone or intent, which makes it a fantastic Chinese tutor), and for writing fiction. They can be OK at summarizing stuff too.

Please tag me when you make that post. The wider community (the LLM people, not Hexbear) seems very negative about Chinese models, and I'd be very interested in a different perspective.

Is it called llama because of the meme?

Llama (acronym for Large Language Model Meta AI, and formerly stylized as LLaMA)

From Wikipedia